Development Tools for Embedded Vision

ENCOMPASSING MOST OF THE STANDARD ARSENAL USED FOR DEVELOPING REAL-TIME EMBEDDED PROCESSOR SYSTEMS

The software tools (compilers, debuggers, operating systems, libraries, etc.) encompass most of the standard arsenal used for developing real-time embedded processor systems, with the addition of specialized vision libraries and, in some cases, vendor-specific development tools. On the hardware side, the requirements depend on the application space, since the designer may need equipment for monitoring and testing real-time video data. Most of these hardware development tools are already used for other types of video system design.

Both general-purpose and vendor-specific tools

Many vendors of vision devices use integrated CPUs that are based on the same instruction set (ARM, x86, etc.), allowing a common set of development tools for software development. However, even though the base instruction set is the same, each CPU vendor integrates a different set of peripherals that have unique software interface requirements. In addition, most vendors accelerate the CPU with specialized computing devices (GPUs, DSPs, FPGAs, etc.). This extended CPU programming model requires a customized version of standard development tools. Most CPU vendors develop their own optimized software tool chain, while also working with third-party software tool suppliers to make sure that the CPU components are broadly supported.

Heterogeneous software development in an integrated development environment

Since vision applications often require a mix of processing architectures, the development tools become more complicated and must handle multiple instruction sets and additional system debugging challenges. Most vendors provide a suite of tools that integrate development tasks into a single interface for the developer, simplifying software development and testing.
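As a rough illustration of what a mix of processing architectures means at the software level, the sketch below registers one vision operation under several backend names and runs it on the first backend that is available, falling back to a portable CPU version. It is a minimal sketch only: the backend names, the `register` decorator, and the `threshold` function are hypothetical and do not correspond to any vendor's tool chain or API.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Image = List[List[int]]  # grayscale image as rows of 0-255 pixel values

# Hypothetical registry: backend name -> implementation of the same operation.
_THRESHOLD_BACKENDS: Dict[str, Callable[[Image, int], Image]] = {}


def register(backend: str):
    """Decorator that records an implementation under a backend name."""
    def wrap(fn: Callable[[Image, int], Image]):
        _THRESHOLD_BACKENDS[backend] = fn
        return fn
    return wrap


@register("cpu")
def threshold_cpu(image: Image, level: int) -> Image:
    # Portable reference implementation that is always registered.
    return [[255 if px >= level else 0 for px in row] for row in image]


def threshold(
    image: Image,
    level: int,
    preferred: Sequence[str] = ("dsp", "gpu", "cpu"),
) -> Tuple[Image, str]:
    """Run on the first preferred backend that is registered.

    Returns the result together with the name of the backend used, which
    helps when debugging which processor actually executed the operation.
    """
    for name in preferred:
        fn = _THRESHOLD_BACKENDS.get(name)
        if fn is not None:
            return fn(image, level), name
    raise RuntimeError("no backend registered for threshold")


if __name__ == "__main__":
    result, used = threshold([[10, 200], [128, 90]], 128)
    print(used, result)
```

In a real system, an accelerated implementation would be registered only when the corresponding device and its vendor library are present, so the same application code can run on targets with different accelerators.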

OpenMV Demonstration of Its New N6 and AE3 Low Power Python Programmable AI Cameras and Other Products

Kwabena Agyeman, President and Co-founder of OpenMV, demonstrates the company’s latest edge AI and vision technologies and products at the March 2025 Edge AI and Vision Alliance Forum. Specifically, Agyeman demonstrates the company’s new N6 and AE3 low power Python programmable AI cameras, along with the FLIR BOSON thermal camera and Prophesee GENX320 event camera.

RGo Robotics Implements Vision-based Perception Engine on Qualcomm SoCs for Robotics Market

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Mobile robotics developers equip their machines to behave autonomously in the real world by generating facility maps, localizing within them and understanding the geometry of their surroundings. Machines like autonomous mobile robots (AMR), automated guided vehicles (AGV)

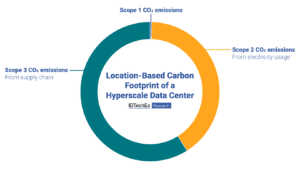

Key Insights from Data Centre World 2025: Sustainability and AI

Scope 2 power-based emissions and Scope 3 supply chain emissions make the biggest contribution to the data center’s carbon footprint. IDTechEx’s Sustainability for Data Centers report explores which technologies can reduce these emissions. Major data center players converged in London, UK, in the middle of March for the 2025 iteration of Data Centre World. Co-located

Explaining Tokens — The Language and Currency of AI

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Under the hood of every AI application are algorithms that churn through data in their own language, one based on a vocabulary of tokens. Tokens are tiny units of data that come from breaking down bigger chunks

The Silent Threat to AI Initiatives

This blog post was originally published at Tryolabs’ website. It is reprinted here with the permission of Tryolabs. The single most common reason why most AI projects fail is not technical. Having spent almost 15 years in the AI & data services space, I can confidently say that the primary cause of failure for AI

Upcoming In-person Event from Andes Technology Explores the RISC-V Ecosystem

On April 29, 2025 from 9:00 AM to 6:00 PM PT, Alliance Member company Andes Technology will deliver the RISC-V CON Silicon Valley event at the DoubleTree Hotel by Hilton in San Jose, CA. Jeff Bier, Founder of the Edge AI and Vision Alliance, will be one of the invited guest speakers. From the event

Exploring the COCO Dataset

This article was originally published at 3LC’s website. It is reprinted here with the permission of 3LC. The COCO dataset is a cornerstone of modern object detection, shaping models used in self-driving cars, robotics, and beyond. But what happens when we take a closer look? By examining annotations, embeddings, and dataset patterns at a granular

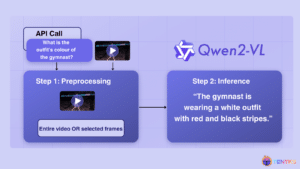

Video Understanding: Qwen2-VL, An Expert Vision-language Model

This article was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. Qwen2-VL, an advanced vision-language model built on Qwen2 [1], sets new benchmarks in image comprehension across varied resolutions and ratios, while also tackling extended video content. Though Qwen2-VL excels on many fronts, this article explores the model’s