Processors for Embedded Vision

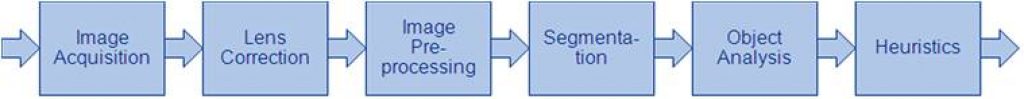

This technology category includes any device that executes vision algorithms or vision system control software. The following diagram shows a typical computer vision pipeline; processors are often optimized for the compute-intensive portions of the software workload.

The following examples represent distinctly different types of processor architectures for embedded vision, and each has advantages and trade-offs that depend on the workload. For this reason, many devices combine multiple processor types into a heterogeneous computing environment, often integrated into a single semiconductor component. In addition, a processor can be accelerated by dedicated hardware that improves performance on computer vision algorithms.

General-purpose CPUs

While computer vision algorithms can run on most general-purpose CPUs, desktop processors may not meet the design constraints of some systems. However, x86 processors and system boards can leverage the PC infrastructure for low-cost hardware and broadly supported software development tools. Several Alliance Member companies also offer devices that integrate a RISC CPU core. A general-purpose CPU is best suited for heuristics, complex decision-making, network access, user interface, storage management, and overall control. A general-purpose CPU may be paired with a vision-specialized device for better performance on pixel-level processing.

Graphics Processing Units

High-performance GPUs deliver massive amounts of parallel computing potential, and graphics processors can be used to accelerate the portions of the computer vision pipeline that perform parallel processing on pixel data. While general-purpose GPUs (GPGPUs) have primarily been used for high-performance computing (HPC), even mobile graphics processors and integrated graphics cores are gaining GPGPU capability, which brings GPU acceleration within the power constraints of a wider range of vision applications. In designs that require 3D processing in addition to embedded vision, a GPU will already be part of the system and can be used to assist a general-purpose CPU with many computer vision algorithms. Many examples exist of x86-based embedded systems with discrete GPGPUs.
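
To make "parallel processing on pixel data" concrete, here is a minimal sketch in plain Python rather than real GPU code. The names `gray_kernel` and `launch` are hypothetical and only imitate the way a GPGPU framework runs one lightweight work item per pixel. Each output pixel depends only on its own input pixel, so the work items can run in any order, and that independence is what lets a GPU run many of them at once.

```python
import random


def gray_kernel(i, rgb, out):
    """Per-pixel work item: convert pixel i from RGB to 8-bit luma.

    Reads only rgb[i] and writes only out[i], so work items are independent.
    The weights (77, 150, 29) / 256 are an integer approximation of the
    BT.601 luma coefficients.
    """
    r, g, b = rgb[i]
    out[i] = (77 * r + 150 * g + 29 * b) >> 8


def launch(kernel, n, *args, shuffle=False):
    """Hypothetical stand-in for a GPU launch: run kernel for indices 0..n-1.

    A real GPU would run these work items concurrently. Here they run one
    after another, optionally in random order to show that order is irrelevant.
    """
    order = list(range(n))
    if shuffle:
        random.shuffle(order)
    for i in order:
        kernel(i, *args)


# A tiny 2x2 "image" stored as a flat list of (R, G, B) tuples.
pixels = [(255, 255, 255), (0, 0, 0), (255, 0, 0), (0, 0, 255)]

in_order = [0] * len(pixels)
launch(gray_kernel, len(pixels), pixels, in_order)

any_order = [0] * len(pixels)
launch(gray_kernel, len(pixels), pixels, any_order, shuffle=True)

print(in_order)               # [255, 0, 76, 28]
print(in_order == any_order)  # True
```

Stages whose outputs depend on many earlier results, such as tracking or decision logic, do not decompose this way, which is one reason they tend to stay on the CPU.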

Digital Signal Processors

DSPs are very efficient for processing streaming data, since the bus and memory architecture are optimized to process high-speed data as it traverses the system. This architecture makes DSPs an excellent solution for processing image pixel data as it streams from a sensor source. Many DSPs for vision have been enhanced with coprocessors that are optimized for processing video inputs and accelerating computer vision algorithms. The specialized nature of DSPs makes these devices inefficient for processing general-purpose software workloads, so DSPs are usually paired with a RISC processor to create a heterogeneous computing environment that offers the best of both worlds.
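
The streaming style described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not DSP code: `stream_smooth` and `sensor_row` are hypothetical names, and the (1, 2, 1) smoothing taps are chosen only for the example. The filter keeps just the last three samples in a delay line and emits a result as soon as enough samples have arrived, so it never needs to hold a full frame in memory.

```python
from collections import deque


def stream_smooth(pixels, taps=(1, 2, 1)):
    """Apply a small FIR filter to a pixel stream, one sample at a time.

    Only the last len(taps) samples are kept (a delay line), so memory use
    is constant regardless of how long the stream is.
    """
    window = deque(maxlen=len(taps))
    norm = sum(taps)
    for p in pixels:
        window.append(p)
        if len(window) == len(taps):
            # One multiply-accumulate per tap, then normalize.
            acc = sum(t * s for t, s in zip(taps, window))
            yield acc // norm


def sensor_row():
    """Hypothetical sensor source that yields one row of pixel values."""
    yield from [10, 10, 50, 90, 90, 90]


print(list(stream_smooth(sensor_row())))  # [20, 50, 80, 90]
```

The inner multiply-accumulate loop is the operation that DSP hardware is typically built to execute efficiently.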

Field Programmable Gate Arrays (FPGAs)

Instead of incurring the high cost and long lead times of a custom ASIC to accelerate computer vision systems, designers can use an FPGA as a reprogrammable solution for hardware acceleration. With millions of programmable gates, hundreds of I/O pins, and compute performance in the trillions of multiply-accumulates per second (tera-MACs/sec), high-end FPGAs offer the potential for the highest performance in a vision system. Unlike a CPU, which has to time-slice or multi-thread tasks as they compete for compute resources, an FPGA can simultaneously accelerate multiple portions of a computer vision pipeline. Because the parallel nature of FPGAs offers so much advantage for accelerating computer vision, many of the algorithms are available as optimized libraries from semiconductor vendors. These computer vision libraries also include preconfigured interface blocks for connecting to other vision devices, such as IP cameras.
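
To put the tera-MAC figure in context, the following back-of-the-envelope sketch estimates the multiply-accumulate rate of a single convolution stage. The function name `conv_macs_per_second`, the 1080p resolution, the 60 frames per second, and the 64-channel layer are all assumptions chosen for illustration; they do not describe any particular device or algorithm.

```python
def conv_macs_per_second(width, height, kernel_size, fps,
                         in_channels=1, out_channels=1):
    """Multiply-accumulates per second for one dense 2D convolution stage.

    Assumes one output value per pixel position (stride 1, same-size output).
    """
    macs_per_output = kernel_size * kernel_size * in_channels
    outputs_per_frame = width * height * out_channels
    return macs_per_output * outputs_per_frame * fps


# 3x3 filter on single-channel 1080p video at 60 frames per second.
filter_load = conv_macs_per_second(1920, 1080, 3, 60)
print(filter_load)  # 1119744000, about 1.1 giga-MACs/sec

# Hypothetical CNN-style layer: 3x3 kernel, 64 input and 64 output channels.
layer_load = conv_macs_per_second(1920, 1080, 3, 60, 64, 64)
print(layer_load / 1e12)  # about 4.6 tera-MACs/sec
```

Under these assumptions a single classical filter needs roughly a thousandth of a tera-MAC per second, while one wide neural-network layer at full resolution already needs several.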

Vision-Specific Processors and Cores

Application-specific standard products (ASSPs) are specialized, highly integrated chips tailored for specific applications or application sets. ASSPs may incorporate a CPU, or use a separate CPU chip. By virtue of their specialization, ASSPs for vision processing typically deliver superior cost- and energy-efficiency compared with other types of processing solutions. Among other techniques, ASSPs deliver this efficiency through the use of specialized coprocessors and accelerators. And, because ASSPs are by definition focused on a specific application, they are usually provided with extensive associated software. This same specialization, however, means that an ASSP designed for vision is typically not suitable for other applications. ASSPs’ unique architectures can also make them more difficult to program than other kinds of processors; some ASSPs are not user-programmable.

NVIDIA Announces Major Release of Cosmos World Foundation Models and Physical AI Data Tools

New Models Enable Prediction, Controllable World Generation and Reasoning for Physical AI. Two New Blueprints Deliver Massive Physical AI Synthetic Data Generation for Robot and Autonomous Vehicle Post-Training. 1X, Agility Robotics, Figure AI, Skild AI Among Early Adopters. March 18, 2025, GTC: NVIDIA today announced a major release of new NVIDIA Cosmos™ world foundation models (WFMs), introducing

NVIDIA Unveils Open Physical AI Dataset to Advance Robotics and Autonomous Vehicle Development

Expected to become the world’s largest such dataset, the initial release of standardized synthetic data is now available to robotics developers as open source. Teaching autonomous robots and vehicles how to interact with the physical world requires vast amounts of high-quality data. To give researchers and developers a head start, NVIDIA is releasing a massive,

NVIDIA Announces DGX Spark and DGX Station Personal AI Computers

Powered by NVIDIA Grace Blackwell, Desktop Supercomputers Place Accelerated AI in the Hands of Developers, Researchers and Data Scientists; Systems Coming From Leading Computer Makers Including ASUS, Dell Technologies, HP and Lenovo. March 18, 2025, GTC: NVIDIA today unveiled NVIDIA DGX™ personal AI supercomputers powered by the NVIDIA Grace Blackwell platform. DGX Spark, formerly Project DIGITS

Build Real-time Multimodal XR Apps with NVIDIA AI Blueprint for Video Search and Summarization

This article was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. With the recent advancements in generative AI and vision foundational models, VLMs present a new wave of visual computing wherein the models are capable of highly sophisticated perception and deep contextual understanding. These intelligent solutions offer a promising

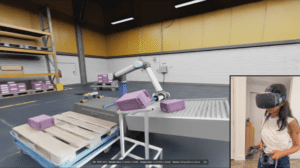

Optimizing Edge AI for Effective Real-time Decision Making in Robotics

This blog post was originally published at Geisel Software’s website. It is reprinted here with the permission of Geisel Software. Optimizing Edge AI: Key Takeaways. Instant Decisions, Real-World Impact: Edge AI empowers robots to react in milliseconds, enabling life-saving actions in critical scenarios like autonomous vehicle collision avoidance and rapid search-and-rescue missions. Unshakeable Reliability, Unbreachable

Passenger Car ADAS Market 2025-2045: Technology, Market Analysis, and Forecasts

For more information, visit https://www.idtechex.com/en/research-report/passenger-car-adas-market-2025-2045-technology-market-analysis-and-forecasts/1080. Global L2+/L3 feature adoption will exceed 50% by 2035. Over the past few years, Advanced Driver Assistance Systems (ADAS) have become a core competitive factor in the passenger vehicles market. In particular, “Level 2+” has emerged as a term describing advanced Level 2 ADAS with more sophisticated capabilities, such as

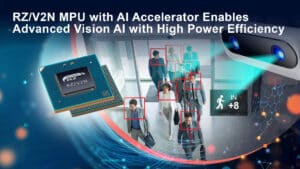

Renesas Extends Mid-class AI Processor Line-up with RZ/V2N Integrating DRP-AI Accelerator for Smart Factories and Intelligent Cities of Tomorrow

Realizing Advanced Endpoint Vision AI While Reducing System Size and Cost with a Power-Efficient MPU that Eliminates the Need for Cooling Fans. NUREMBERG, Germany and TOKYO, Japan: Renesas Electronics Corporation (TSE:6723), a premier supplier of advanced semiconductor solutions, today expanded its RZ/V Series of microprocessors (MPUs) with a new device that targets the high-volume

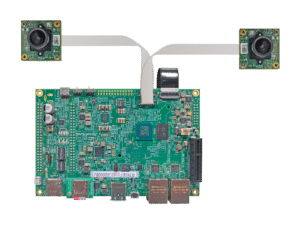

e-con Systems Expands Its Camera Portfolio for the Renesas RZ/V2N MPU in AI-powered Vision Applications

California & Chennai (March 11, 2025): e-con Systems®, a leading provider of embedded vision solutions, continues its strong collaboration with Renesas by supporting the latest high performance RZ/V2N processor, launching at embedded world 2025 in Nuremberg, Germany. Having previously delivered advanced multi-camera solutions for the Renesas RZ/V2H processor, this collaboration further reinforces that e-con Systems

Building a Simple VLM-based Multimodal Information Retrieval System with NVIDIA NIM

This article was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. In today’s data-driven world, the ability to retrieve accurate information from even modest amounts of data is vital for developers seeking streamlined, effective solutions for quick deployments, prototyping, or experimentation. One of the key challenges in information retrieval

Allegro DVT Launches its First AI-based Neural Video Processing IP

Grenoble, France — March 11, 2025 — Allegro DVT, a leader in video processing semiconductor solutions, is excited to announce the launch of its latest innovation, its first AI-based Neural Video Processing NVP300 IP. This groundbreaking product marks Allegro DVT’s commitment to embrace the AI revolution and push video quality to the next level, leveraging

AMD Unveils 5th Gen AMD EPYC Embedded Processors Delivering Leadership Performance, Efficiency and Long Product Lifecycles for Networking, Storage, and Industrial Edge Markets

High-performance “Zen 5” architecture offers server-grade performance and efficiency combined with purpose-built features for optimized product longevity and system resiliency. Cisco and IBM among the first technology partners to adopt 5th Gen AMD EPYC Embedded CPUs to power next generation platforms. NUREMBERG, Germany, March 11, 2025 (GLOBE NEWSWIRE): AMD (NASDAQ: AMD) today announced the expansion

Altera to Showcase the Latest Programmable Innovations at Embedded World 2025

Sandra Rivera, CEO of Altera, will explore the transformative impact of AI at the Edge at Embedded World 2025. In her opening keynote, Sandra will discuss how FPGAs are revolutionizing AI implementation by offering flexible, programmable hardware and software solutions. NUREMBERG, Germany, Mar. 10, 2025 – Today at Embedded World, Altera Corporation, a leader in

Qualcomm at Embedded World: Accelerating Digital Transformation with Edge AI

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. An essential partner to the embedded community, Qualcomm Technologies, Inc. strengthens its leadership in intelligent computing with several key announcements. The AI revolution is sparking a wave of innovation in the embedded community, spawning a flurry of

The Rise of Chiplets and Simplified Interconnectivity

Smaller and more compact designs with simplified structures can be achieved with chiplet technology. IDTechEx’s report, “Chiplet Technology 2025-2035: Technology, Opportunities, Applications”, unpacks the benefits and challenges of developing chiplet technology compared with competing semiconductor designs and their best-suited applications. Chiplet characteristics: Chiplets allow for GPU, CPU, and IO components to become miniaturized to suit

Synaptics Extends Edge AI Portfolio with High-performance Adaptive MCUs for Multimodal Context-aware Computing

Featuring three power levels, the SR-Series MCUs scale Astra AI-Native hardware and open-source software to accelerate the development of low-power, cognitive IoT devices. Nuremberg, Germany, March 10, 2025: Synaptics® Incorporated (Nasdaq: SYNA) has extended its award-winning Synaptics Astra™ AI-Native platform with the SR-Series high-performance adaptive microcontroller units (MCUs) for scalable context-aware Edge AI. The

Axelera AI Secures Up to €61.6 Million Grant to Develop Scalable AI Chiplet for High-performance Computing

March 6, 2025 – Axelera AI, the leading provider of purpose-built AI hardware acceleration technology for generative AI and computer vision inference at the edge, unveiled Titania™, a high-performance, energy efficient and scalable AI inference chiplet. The development of this chiplet builds on Axelera AI’s innovative approach to Digital In-Memory Computing (D-IMC) architecture, which provides

Unveiling the Qualcomm Dragonwing Brand Portfolio: Solutions For a New Era of Industrial Innovation

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Our mission is to deliver intelligent computing everywhere. We have an amazing suite of products, and while you may be familiar with the Snapdragon brand portfolio, you may not know that we have a whole suite of

How e-con Systems’ TintE ISP IP Core Increases the Efficiency of Embedded Vision Applications

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. e-con Systems has developed TintE™, a ready to deploy ISP IP core engineered to enhance image quality in camera systems. Built to deliver high performance on leading FPGA platforms, it accelerates real-time image processing with

MIPS Drives Real-time Intelligence into Physical AI Platforms

The new MIPS Atlas product suite delivers cutting-edge compute subsystems that empower autonomous edge solutions to sense, think and act with precision, driving innovation across the growing physical AI opportunity in industrial robotics and autonomous platform markets. SAN JOSE, CA. – March 4th, 2025 – MIPS, the world’s leading supplier of compute subsystems for autonomous

Vision Language Model Prompt Engineering Guide for Image and Video Understanding

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Vision language models (VLMs) are evolving at a breakneck speed. In 2020, the first VLMs revolutionized the generative AI landscape by bringing visual understanding to large language models (LLMs) through the use of a vision encoder. These