Processors for Embedded Vision


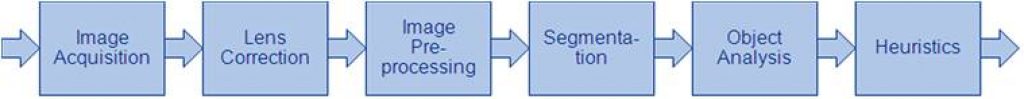

This technology category includes any device that executes vision algorithms or vision system control software. The following diagram shows a typical computer vision pipeline; processors are often optimized for the compute-intensive portions of the software workload.

The following examples represent distinctly different types of processor architectures for embedded vision, and each has advantages and trade-offs that depend on the workload. For this reason, many devices combine multiple processor types into a heterogeneous computing environment, often integrated into a single semiconductor component. In addition, a processor can be accelerated by dedicated hardware that improves performance on computer vision algorithms.

General-purpose CPUs

While computer vision algorithms can run on most general-purpose CPUs, desktop processors may not meet the design constraints of some systems. However, x86 processors and system boards can leverage the PC infrastructure for low-cost hardware and broadly supported software development tools. Several Alliance Member companies also offer devices that integrate a RISC CPU core. A general-purpose CPU is best suited for heuristics, complex decision-making, network access, user interface, storage management, and overall control. It may be paired with a vision-specialized device for better performance on pixel-level processing.

Graphics Processing Units

High-performance GPUs offer massive parallel computing potential, and graphics processors can be used to accelerate the portions of the computer vision pipeline that perform parallel processing on pixel data. While general-purpose GPUs (GPGPUs) have primarily been used for high-performance computing (HPC), even mobile graphics processors and integrated graphics cores are gaining GPGPU capability, which lets them meet the power constraints of a wider range of vision applications. In designs that require 3D processing in addition to embedded vision, a GPU will already be part of the system and can be used to assist a general-purpose CPU with many computer vision algorithms. Many examples exist of x86-based embedded systems with discrete GPGPUs.
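To illustrate what "parallel processing on pixel data" means, here is a minimal sketch in plain Python of a per-pixel operation (RGB to grayscale). The function names `luma` and `to_grayscale` are hypothetical and the code is not a GPU program; it only shows that each output pixel depends on a single input pixel, which is the property that lets a GPU compute many pixels at once. The weights are the standard BT.601 luma coefficients, in an integer approximation.

```python
# Sketch only: a pure-Python stand-in for a per-pixel kernel.
# `luma` and `to_grayscale` are illustrative names, not a real library API.

def luma(pixel):
    """Convert one (R, G, B) pixel to an 8-bit grayscale value."""
    r, g, b = pixel
    # Integer approximation of 0.299*R + 0.587*G + 0.114*B (BT.601 weights).
    return (299 * r + 587 * g + 114 * b) // 1000


def to_grayscale(image):
    """Apply `luma` to every pixel of a row-major image (list of rows).

    No pixel reads its neighbours' results, so every call to `luma`
    could run independently, for example as one GPU thread per pixel.
    """
    return [[luma(p) for p in row] for row in image]


if __name__ == "__main__":
    frame = [[(255, 255, 255), (255, 0, 0)],
             [(0, 255, 0), (0, 0, 255)]]
    print(to_grayscale(frame))
```

On a real GPU the body of `luma` would typically become the kernel, with the two loops replaced by the hardware's thread grid.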

Digital Signal Processors

DSPs are very efficient for processing streaming data, since the bus and memory architecture are optimized to process high-speed data as it traverses the system. This architecture makes DSPs an excellent solution for processing image pixel data as it streams from a sensor source. Many DSPs for vision have been enhanced with coprocessors that are optimized for processing video inputs and accelerating computer vision algorithms. The specialized nature of DSPs makes these devices inefficient for processing general-purpose software workloads, so DSPs are usually paired with a RISC processor to create a heterogeneous computing environment that offers the best of both worlds.
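The streaming style of processing described above can be sketched in software. The example below is an assumption-laden illustration, not DSP code: a hypothetical generator `stream_box3` consumes pixels one at a time, keeps only a three-sample window, and emits a smoothed value as soon as enough data has arrived, without ever buffering the whole frame.

```python
from collections import deque


def stream_box3(pixels):
    """Yield the 3-sample moving average of a pixel stream.

    Only the last three samples are held in memory, mimicking how a
    streaming processor works on data as it arrives from a sensor.
    """
    window = deque(maxlen=3)
    total = 0
    for p in pixels:
        if len(window) == 3:
            # The oldest sample is about to fall out of the window.
            total -= window[0]
        window.append(p)
        total += p
        if len(window) == 3:
            yield total // 3


if __name__ == "__main__":
    # One scan line arriving sample by sample.
    print(list(stream_box3([3, 6, 9, 12])))
```

The running sum means each new sample costs one subtraction and one addition regardless of history, which is the kind of fixed per-sample work that suits a streaming architecture.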

Field Programmable Gate Arrays (FPGAs)

Instead of incurring the high cost and long lead times of a custom ASIC to accelerate computer vision systems, designers can implement an FPGA to offer a reprogrammable solution for hardware acceleration. With millions of programmable gates, hundreds of I/O pins, and compute performance in the trillions of multiply-accumulates/sec (tera-MACs), high-end FPGAs offer the potential for the highest performance in a vision system. Unlike a CPU, which has to time-slice or multi-thread tasks as they compete for compute resources, an FPGA can simultaneously accelerate multiple portions of a computer vision pipeline. Because the parallel nature of FPGAs is such an advantage for accelerating computer vision, many of the algorithms are available as optimized libraries from semiconductor vendors. These computer vision libraries also include preconfigured interface blocks for connecting to other vision devices, such as IP cameras.
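To put the multiply-accumulate figure in context, the rough calculation below estimates the MAC rate of a single square convolution filter applied to every pixel of a video stream. The function name, the resolution, the kernel size, and the frame rate are all illustrative assumptions chosen for the example, not figures from any vendor.

```python
def conv_macs_per_second(width, height, kernel_size, fps, channels=1):
    """Estimate multiply-accumulates per second for one square filter.

    Assumes one MAC per kernel tap per pixel per channel and ignores
    border handling, so treat the result as an order-of-magnitude figure.
    """
    return width * height * kernel_size * kernel_size * channels * fps


if __name__ == "__main__":
    # Assumed workload: one 3x3 filter on a 1920x1080 stream at 60 frames/s.
    rate = conv_macs_per_second(1920, 1080, 3, 60)
    print(f"{rate / 1e9:.2f} GMAC/s")
```

Under these assumptions a single 3x3 filter needs roughly 1.12 GMAC/s, about a thousandth of a tera-MAC. Workloads approach the tera-MAC range only when many such filters, channels, or pipeline stages run concurrently.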

Vision-Specific Processors and Cores

Application-specific standard products (ASSPs) are specialized, highly integrated chips tailored for specific applications or application sets. ASSPs may incorporate a CPU, or use a separate CPU chip. By virtue of their specialization, ASSPs for vision processing typically deliver superior cost- and energy-efficiency compared with other types of processing solutions. Among other techniques, ASSPs deliver this efficiency through the use of specialized coprocessors and accelerators. And, because ASSPs are by definition focused on a specific application, they are usually provided with extensive associated software. This same specialization, however, means that an ASSP designed for vision is typically not suitable for other applications. ASSPs’ unique architectures can also make programming them more difficult than with other kinds of processors; some ASSPs are not user-programmable.

AMD Unveils Next-generation AMD RDNA 4 Architecture with the Launch of AMD Radeon RX 9000 Series Graphics Cards

The new AMD Radeon™ RX 9000 Series graphics cards deliver enthusiast-level gaming experiences supercharged by AI SANTA CLARA, Calif., Feb. 28, 2025 (GLOBE NEWSWIRE) — AMD (NASDAQ: AMD) today unveiled the highly-anticipated AMD RDNA™ 4 graphics architecture with the launch of the AMD Radeon™ RX 9070 XT and RX 9070 graphics cards as a part

Arm Drives Next-generation Performance for IoT with World’s First Armv9 Edge AI Platform

News highlights: World’s first Armv9 edge AI platform, optimized for IoT with the new Cortex-A320 CPU and Ethos-U85 NPU, enables on-device AI models over one billion parameters – earning support from industry leaders, including AWS, Siemens and Renesas. The ultra-efficient Arm Cortex-A320 leverages Armv9 to enhance efficiency, performance, and security in IoT markets, driving advancements

How FPGAs Are Advancing Next-generation Embedded Vision Applications (Part 2)

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Field-programmable Gate Arrays (FPGAs) help embedded vision applications meet complex requirements. Discover why FPGAs power next-gen systems with computing power, flexibility, and energy awareness, and learn about their future impact. Embedded vision is a huge

Andes Technology and proteanTecs Partner to Bring Performance and Reliability Monitoring to RISC-V Cores

proteanTecs’ on-chip monitoring successfully integrated into the AndesCore™ AX45MPV RISC-V multicore vector processor TAIPEI, Taiwan and HAIFA, Israel — Feb 25, 2025 — Andes Technology Corporation (TWSE: 6533), a leading supplier of RISC-V processor IP, and proteanTecs, a global leader of health and performance monitoring solutions for advanced electronics, today announced a strategic partnership. This collaboration enables joint customers

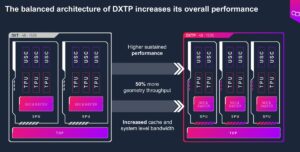

Imagination Takes Efficiency Up a Level with Latest D-series GPU IP

The Imagination DXTP GPU IP extends battery life when accelerating graphics and compute workloads on mobile and other power-constrained devices. 25 February 2025 – Today Imagination Technologies announces its latest GPU IP, Imagination DXTP, which sets a new standard for the efficient acceleration of graphics and compute workloads on smartphones and other power-constrained devices. Thanks

New AI Model Offers Cellular-level View of Cancerous Tumors

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Researchers studying cancer unveiled a new AI model that provides cellular-level mapping and visualizations of cancer cells, which scientists hope can shed light on how, and why, certain inter-cellular relationships trigger cancers to grow. BioTuring, a San Diego-based startup,

D3 Embedded Partners with Silicon Highway to Provide Rugged Camera Solutions to Europe

Rochester, NY – February 12, 2025 – D3 Embedded today announced its partnership with Silicon Highway, a leading European distribution company specializing in embedded AI edge solutions, to accelerate the delivery of high-performance rugged cameras to the European market. This partnership will allow D3 Embedded to leverage Silicon Highway’s local expertise and knowledge of the

Intel Unveils Leadership AI and Networking Solutions with Xeon 6 Processors

Intel completes Xeon 6 portfolio of processors, delivering a CPU for the broadest set of workloads in the industry. News highlights: Intel launches new Intel® Xeon® 6 processors with Performance-cores, offering industry-leading performance across data center workloads and up to 2x higher performance in AI processing. New Xeon 6 processors for network and edge applications

Vision Components and Phytec Announce Partnership: MIPI Cameras and Processor Boards Perfectly Integrated

Mainz, February 20, 2025 – Phytec and Vision Components have agreed to collaborate on the integration of cameras into embedded systems. The partnership enables users to easily operate all of VC’s 50+ MIPI cameras with Phytec embedded imaging processor boards. The corresponding drivers are already included in the Linux BSP of the Phytec modules. As

DeepSeek-R1 1.5B on SiMa.ai for Less Than 10 Watts

February 18, 2025 09:00 AM Eastern Standard Time–SAN JOSE, Calif.–(BUSINESS WIRE)–SiMa.ai, the software-centric, embedded edge machine learning system-on-chip (MLSoC) company, today announced the successful implementation of DeepSeek-R1-Distill-Qwen-1.5B on its ONE Platform for Edge AI, achieving breakthrough performance within an unprecedented power envelope of under 10 watts. This implementation marks a significant advancement in efficient, secure

The Critical Role of FPGAs in Modern Embedded Vision Systems (Part 1)

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. FPGAs are rapidly becoming a best-fit solution for meeting the unique demands of high-performing embedded vision systems. Get insights into what FPGAs are, the challenges they solve, and how they offer unmatched benefits for interface

From Brain to Binary: Can Neuro-inspired Research Make CPUs the Future of AI Inference?

This article was originally published at Tryolabs’ website. It is reprinted here with the permission of Tryolabs. In the ever-evolving landscape of AI, the demand for powerful Large Language Models (LLMs) has surged. This has led to an unrelenting thirst for GPUs and a shortage that causes headaches for many organizations. But what if there

$1 Trillion by 2030: The Semiconductor Devices Industry is On Track

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. Entering a new growth cycle, the surge in the semiconductor industry is driven by the rise of generative AI processors and HBM[1] OUTLINE Market evolution: with a $672 billion market in 2024[2],

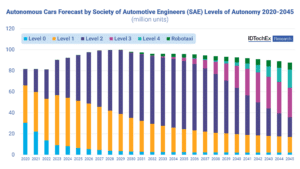

Autonomous Cars are Leveling Up: Exploring Vehicle Autonomy

When the Society of Automotive Engineers released their definitions of varying levels of automation from level 0 to level 5, it became easier to define and distinguish between the many capabilities and advancements of autonomous vehicles. Level 0 describes an older model of vehicle with no automated features, while level 5 describes a future ideal

Introducing Qualcomm Custom-built AI Models, Now Available on Qualcomm AI Hub

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. We’re thrilled to announce that five custom-built computer vision (CV) models are now available on Qualcomm AI Hub! Qualcomm Technologies’ custom-built models were developed by the Qualcomm R&D team, optimized for our platforms and designed with end-user applications

NXP Agrees to Acquire Edge AI Pioneer Kinara to Redefine the Intelligent Edge

Enhances NXP’s leading processing portfolio with cutting edge NPUs and AI software, driving intelligent system solutions across the industrial and automotive edge markets. Delivers high-performance neural network processing with advanced generative AI to create transformative edge use cases. Establishes a scalable platform for AI-powered edge systems, combining NXP’s broad portfolio of processing, connectivity, security, and

New AI SDKs and Tools Released for NVIDIA Blackwell GeForce RTX 50 Series GPUs

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. NVIDIA recently announced a new generation of PC GPUs—the GeForce RTX 50 Series—alongside new AI-powered SDKs and tools for developers. Powered by the NVIDIA Blackwell architecture, fifth-generation Tensor Cores and fourth-generation RT Cores, the GeForce RTX 50

Vision Components Introduces MIPI Camera Modules with Integrated Image Pre-processing

Ettlingen, February 6, 2025. Vision Components introduces VC MIPI Cameras with onboard image pre-processing at embedded world (March 11-13, 2025, Nuremberg). The tiny camera modules can detect and extract barcodes, objects, edges and laser lines as well as perform blob analyses and color conversions. VC will also show a new version of the FPGA accelerator

Qualcomm AI Research Makes Diverse Datasets Available to Advance Machine Learning Research

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Qualcomm AI Research has published a variety of datasets for research use. These datasets can be used to train models in the kinds of applications most common to mobile computing, including: advanced driver assistance systems (ADAS), extended reality

Make Your Existing NVIDIA Jetson Orin Devices Faster with Super Mode

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. The NVIDIA Jetson Orin Nano Super Developer Kit, with its compact size and high-performance computing capabilities, is redefining generative AI for small edge devices. NVIDIA has dropped an exciting new update to their existing Jetson