Processors for Embedded Vision


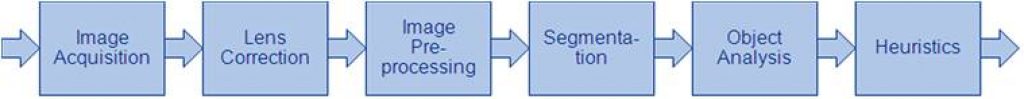

This technology category includes any device that executes vision algorithms or vision system control software. The diagram above shows a typical computer vision pipeline; processors are often optimized for the compute-intensive portions of the software workload.

The following examples represent distinctly different types of processor architectures for embedded vision, and each has advantages and trade-offs that depend on the workload. For this reason, many devices combine multiple processor types into a heterogeneous computing environment, often integrated into a single semiconductor component. In addition, a processor can be accelerated by dedicated hardware that improves performance on computer vision algorithms.
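To make the heterogeneous idea concrete, here is a minimal Python sketch of how a pipeline might be partitioned across processor types. The stage names and the rule "pixel-level stages go to an accelerator, everything else stays on the CPU" are hypothetical simplifications; a real partition also weighs data-transfer cost, memory bandwidth, and which stages have vendor-optimized libraries.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Stage:
    name: str          # hypothetical stage name
    pixel_level: bool  # True if the stage does the same work on every pixel


def assign_processors(stages: List[Stage]) -> Dict[str, str]:
    """Map each stage to a processor class using a deliberately simple rule.

    Pixel-level, data-parallel stages go to a vision accelerator (GPU, DSP,
    FPGA, or ASSP); control and decision stages stay on the general-purpose CPU.
    """
    return {s.name: "accelerator" if s.pixel_level else "cpu" for s in stages}


# Hypothetical pipeline, ordered from raw pixels to a final decision.
PIPELINE = [
    Stage("denoise", pixel_level=True),
    Stage("edge_detect", pixel_level=True),
    Stage("extract_features", pixel_level=True),
    Stage("track_objects", pixel_level=False),
    Stage("decide_action", pixel_level=False),
]

for stage, target in assign_processors(PIPELINE).items():
    print(f"{stage:>16} -> {target}")
```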

General-purpose CPUs

While computer vision algorithms can run on most general-purpose CPUs, desktop processors may not meet the design constraints of some systems. However, x86 processors and system boards can leverage the PC infrastructure for low-cost hardware and broadly supported software development tools. Several Alliance Member companies also offer devices that integrate a RISC CPU core. A general-purpose CPU is best suited for heuristics, complex decision-making, network access, user interface, storage management, and overall control. A general-purpose CPU may be paired with a vision-specialized device for better performance on pixel-level processing.
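The decision logic that suits a CPU is often short, branchy code operating on small per-frame results rather than on pixels. As an illustrative sketch (the function name and the three-frame rule are hypothetical), a CPU might take per-frame detection flags produced by a vision accelerator and raise an event only once an object has been seen in several consecutive frames, suppressing single-frame false positives:

```python
from typing import Iterable, Iterator


def debounce(detections: Iterable[bool], n: int = 3) -> Iterator[bool]:
    """Yield True for each frame on which the object has been detected
    in at least n consecutive frames (including the current one)."""
    run = 0
    for hit in detections:
        run = run + 1 if hit else 0  # reset the streak on any missed frame
        yield run >= n


# Per-frame detector output, e.g. from an accelerator running the pixel-level stages.
frames = [True, True, False, True, True, True, True]
print(list(debounce(frames)))
# [False, False, False, False, False, True, True]
```

The accelerator does the heavy per-pixel work once per frame; the CPU only touches one boolean per frame, which is why this kind of control code rarely needs specialized hardware.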

Graphics Processing Units

High-performance GPUs deliver massive parallel computing capability, so graphics processors can be used to accelerate the portions of the computer vision pipeline that perform parallel processing on pixel data. While general-purpose GPUs (GPGPUs) have primarily been used for high-performance computing (HPC), even mobile graphics processors and integrated graphics cores are gaining GPGPU capability, meeting the power constraints of a wider range of vision applications. In designs that require 3D processing in addition to embedded vision, a GPU will already be part of the system and can assist a general-purpose CPU with many computer vision algorithms. Many examples exist of x86-based embedded systems with discrete GPGPUs.
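What makes pixel-level work a good fit for a GPU is data independence: each output pixel can be computed without waiting for any other. The Python sketch below uses a binary threshold (an illustrative choice, with a hypothetical threshold of 128) and hands rows to a thread pool. Python threads will not deliver GPU-like speedups; the point is only that the rows can be processed in any order, which is the property a GPU exploits by running thousands of threads, typically one per pixel or small tile.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List

Image = List[List[int]]  # grayscale image as rows of 0-255 values


def threshold_row(row: List[int], t: int = 128) -> List[int]:
    # Each output pixel depends only on the matching input pixel.
    return [255 if p >= t else 0 for p in row]


def threshold_image(image: Image, workers: int = 4) -> Image:
    # Rows are independent, so they can be processed concurrently and in any
    # order; executor.map still returns the results in input order.
    with ThreadPoolExecutor(max_workers=workers) as ex:
        return list(ex.map(threshold_row, image))


img = [[10, 200, 128], [127, 129, 0]]
print(threshold_image(img))  # [[0, 255, 255], [0, 255, 0]]
```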

Digital Signal Processors

DSPs are very efficient for processing streaming data, since the bus and memory architecture are optimized to process high-speed data as it traverses the system. This architecture makes DSPs an excellent solution for processing image pixel data as it streams from a sensor source. Many DSPs for vision have been enhanced with coprocessors that are optimized for processing video inputs and accelerating computer vision algorithms. The specialized nature of DSPs makes these devices inefficient for processing general-purpose software workloads, so DSPs are usually paired with a RISC processor to create a heterogeneous computing environment that offers the best of both worlds.
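Streaming processing of the kind DSPs are built for can be sketched with a line buffer: instead of storing a whole frame, the processor keeps only the few most recent rows a filter needs and emits output as each new row arrives from the sensor. The Python generator below applies a 3×3 box (averaging) filter this way; the filter choice, integer rounding, and skipping of border pixels are simplifying assumptions for illustration.

```python
from collections import deque
from typing import Iterable, Iterator, List

Row = List[int]


def stream_box3x3(rows: Iterable[Row]) -> Iterator[Row]:
    """Yield 3x3 box-filtered output rows as input rows arrive.

    Only three rows are buffered at a time (a "line buffer"), so the whole
    frame never needs to be stored. Border pixels are skipped: an input of
    width W yields output rows of width W - 2, and the first output row
    appears once three input rows have arrived.
    """
    window: deque = deque(maxlen=3)  # the oldest row is dropped automatically
    for row in rows:
        window.append(row)
        if len(window) < 3:
            continue  # still filling the line buffer
        top, mid, bot = window
        out = []
        for x in range(1, len(mid) - 1):
            s = sum(r[x - 1] + r[x] + r[x + 1] for r in (top, mid, bot))
            out.append(s // 9)  # integer average of the 3x3 neighborhood
        yield out


# A stand-in "sensor" that delivers rows one at a time.
sensor_rows = iter([[0, 0, 0], [0, 9, 0], [0, 0, 0], [9, 9, 9]])
for out_row in stream_box3x3(sensor_rows):
    print(out_row)
# [1]
# [4]
```

Memory use depends on the row width and kernel height, not on the frame height, which is why this pattern maps well onto hardware that processes pixels as they stream in.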

Field Programmable Gate Arrays (FPGAs)

Instead of incurring the high cost and long lead times of a custom ASIC to accelerate computer vision systems, designers can use an FPGA as a reprogrammable hardware accelerator. With millions of programmable gates, hundreds of I/O pins, and compute performance in the trillions of multiply-accumulate operations per second (tera-MACs), high-end FPGAs offer the potential for the highest performance in a vision system. Unlike a CPU, which has to time-slice or multi-thread tasks as they compete for compute resources, an FPGA can simultaneously accelerate multiple portions of a computer vision pipeline. Because this parallelism is so well suited to computer vision, many vision algorithms are available as optimized libraries from semiconductor vendors. These computer vision libraries also include preconfigured interface blocks for connecting to other vision devices, such as IP cameras.
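The benefit of running pipeline stages simultaneously can be estimated with simple arithmetic. If one compute resource runs every stage for each frame in turn, the frame period is the sum of the stage times; if each stage has its own dedicated hardware and works on a different frame at the same time, the steady-state period is set by the slowest stage. The stage timings below are hypothetical, and this idealized model ignores memory bandwidth, data-transfer overhead, and the fact that multicore CPUs can also pipeline work across threads.

```python
from typing import Sequence


def frames_per_second(stage_ms: Sequence[float], pipelined: bool) -> float:
    """Idealized steady-state throughput of a chain of pipeline stages."""
    # Sequential: one resource runs every stage, so the frame period is the sum.
    # Pipelined: every stage works on a different frame concurrently,
    # so the slowest stage sets the frame period.
    period_ms = max(stage_ms) if pipelined else sum(stage_ms)
    return 1000.0 / period_ms


stages_ms = [4.0, 10.0, 6.0]  # hypothetical per-stage times for one frame
print(frames_per_second(stages_ms, pipelined=False))  # 50.0
print(frames_per_second(stages_ms, pipelined=True))   # 100.0
```

Pipelining raises throughput, not the latency of an individual frame, which still passes through every stage; balancing the stages so that no single one dominates is what lets the pipelined figure approach its best case.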

Vision-Specific Processors and Cores

Application-specific standard products (ASSPs) are specialized, highly integrated chips tailored for specific applications or application sets. ASSPs may incorporate a CPU, or use a separate CPU chip. By virtue of their specialization, ASSPs for vision processing typically deliver superior cost- and energy-efficiency compared with other types of processing solutions. Among other techniques, ASSPs deliver this efficiency through the use of specialized coprocessors and accelerators. And, because ASSPs are by definition focused on a specific application, they are usually provided with extensive associated software. This same specialization, however, means that an ASSP designed for vision is typically not suitable for other applications. ASSPs’ unique architectures can also make programming them more difficult than with other kinds of processors; some ASSPs are not user-programmable.

Advanced Packaging: How AI is Revolutionizing the Game

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. The growth of the AI server market is driving the adoption of 2.5D/3D packaging technologies. 2.5D & 3D interconnect types for the high-end performance packaging market: Revenue is expected to grow with

STMicroelectronics and HighTec EDV-Systeme Collaborate for Safer Software-defined Vehicles

Where safety meets safety: ST’s Stellar MCUs certified to the highest level of risk management, ISO 26262 ASIL D, are now supported with the same safety level by HighTec’s Rust compiler. Geneva, Switzerland and Saarbrücken, Germany, February 4, 2025 – STMicroelectronics (NYSE: STM), a global semiconductor leader serving customers across the spectrum of electronics applications,

Empowering Civil Construction with AI-driven Spatial Perception

This blog post was originally published at Au-Zone Technologies’ website. It is reprinted here with the permission of Au-Zone Technologies. Transforming Safety, Efficiency, and Automation in Construction Ecosystems. In the rapidly evolving field of civil construction, AI-based spatial perception technologies are reshaping the way machinery operates in dynamic and unpredictable environments. These systems enable advanced

Can Money Buy AI Dominance?

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. The announcements of the creation of Stargate last week as well as the revelation of DeepSeek-V3’s performance at a much lower cost than OpenAI and its competitors are both raising a lot

AI Chips Take Center Stage at CES

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. The 2025 edition of the world’s largest consumer electronics conference showcased the latest semiconductors for artificial intelligence applications. The CES 2025 conference in Las Vegas showcased the latest semiconductor innovations, offering some

Which AI Hardware Will Rise Above in the Wake of Competing AI Models?

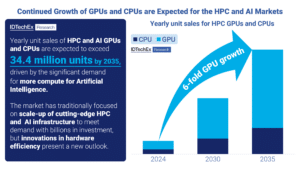

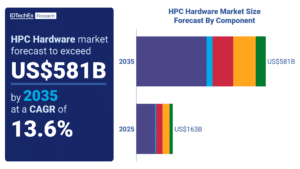

IDTechEx forecasts for yearly unit sales of HPC and AI GPUs and CPUs between 2024 and 2035. The grip of AI has continued to hold, with strong competition to develop more powerful AI models, active innovation in the semiconductor industry, and continued investment in the High-Performance Computing (HPC) data center market. Artificial Intelligence has been

Ambarella and Gauzy Harness Power of AI for Breakthroughs in Advanced Driver Assistance Systems (ADAS), Including Ford Trucks

Gauzy’s AI-powered Smart-Vision camera monitoring system (CMS) leverages Ambarella’s cutting-edge CVflow AI Systems-on-Chip (SoCs) to enhance road safety and redefine urban mobility. NEW YORK and SANTA CLARA, Calif. – January 30, 2025 – Gauzy Ltd. (Nasdaq: GAUZ), a global leader in light and vision control technology, today announced that its strategic partnership with edge AI

How Qualcomm is Catalyzing Retail’s AI Revolution

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Retail is embracing innovation: we’ll be showing off examples of these game-changing experiences at NRF 2025. With more places to shop than ever, physical stores are turning to AI and technology for a competitive edge. That

Upgraded Sensor Board from STMicroelectronics Accelerates Plug-and-play Evaluation with ST MEMS Studio

New hardware integrates closely with convenient, graphical development environment. Geneva, Switzerland, January 27, 2025 – Developing context-aware applications with MEMS sensors is faster, more powerful, and more flexible with ST’s latest-generation sensor evaluation board, the STEVAL-MKI109D. Now upgraded with an STM32H5 microcontroller, USB-C connector, and extra digital interfaces including I3C for flexible communication, the

Harnessing the Power of LLM Models on Arm CPUs for Edge Devices

This blog post was originally published at Digica’s website. It is reprinted here with the permission of Digica. In recent years, the field of machine learning has witnessed significant advancements, particularly with the development of Large Language Models (LLMs) and image generation models. Traditionally, these models have relied on powerful cloud-based infrastructures to deliver impressive

AI On the Road: Why AI-powered Cars are the Future

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. AI transforms your driving experience in unexpected ways as showcased by Qualcomm Technologies collaborations. As automotive technology rapidly advances, consumers are looking for vehicles that deliver AI-enhanced experiences through conversational voice assistants and sophisticated user interfaces. Automotive

CPUs, GPUs, and AI: Exploring High-performance Computing Hardware

IDTechEx’s latest report, “Hardware for HPC and AI 2025-2035: Technologies, Markets, Forecasts”, provides data to show that the use of graphics processing units (GPUs) within high performance computing (HPC) has been increasingly adopted since the introduction of generative AI and large language models, as well as advanced memory, storage, networking, and cooling technologies. Drivers for

Visual Intelligence at the Edge

This blog post was originally published at Au-Zone Technologies’ website. It is reprinted here with the permission of Au-Zone Technologies. Optimizing AI-based video telematics deployments on constrained SoC platforms. The demand for advanced video telematics systems is growing rapidly as companies seek to enhance road safety, improve operational efficiency, and manage liability costs with AI-powered

NVIDIA JetPack 6.2 Brings Super Mode to NVIDIA Jetson Orin Nano and Jetson Orin NX Modules

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. The introduction of the NVIDIA Jetson Orin Nano Super Developer Kit sparked a new age of generative AI for small edge devices. The new Super Mode delivered an unprecedented generative AI performance boost of up to 1.7x

Edge Intelligence and Interoperability are the Key Components Driving the Next Chapter of the Smart Home

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. The smart home industry is on the brink of a significant leap forward, fueled by generative AI and edge capabilities. The smart home is evolving to include advanced capabilities, such as digital assistants that interact like friends

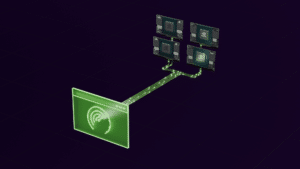

Accelerate Custom Video Foundation Model Pipelines with New NVIDIA NeMo Framework Capabilities

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Generative AI has evolved from text-based models to multimodal models, with a recent expansion into video, opening up new potential uses across various industries. Video models can create new experiences for users or simulate scenarios for training

HPC Hardware Market to Grow at 13.6% CAGR to 2035

HPC systems, including supercomputers, outclass all other classes of computing in terms of calculation speed by parallelizing processing over many processors. HPC has long been an integral tool across critical industries, from facilitating engineering modeling to predicting the weather. The AI boom has intensified development in the sector, growing the capabilities of hardware technologies, including

How AI On the Edge Fuels the 7 Biggest Consumer Tech Trends of 2025

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. From more on-device AI features on your phone to the future of cars, 2025 is shaping up to be a big year. Over the last two years, generative AI (GenAI) has shaken up, well, everything. Heading into

BrainChip Brings Neuromorphic Capabilities to M.2 Form Factor

Laguna Hills, Calif. – January 8th, 2025 – BrainChip Holdings Ltd (ASX: BRN, OTCQX: BRCHF, ADR: BCHPY), the world’s first commercial producer of ultra-low power, fully digital, event-based, neuromorphic AI, today announced the availability of its Akida™ advanced neural networking processor on the M.2 form factor, enabling a low-cost, high-speed and low-power consumption option for

Intel Accelerates Software-defined Innovation with Whole-vehicle Approach

At CES 2025, Intel unveils new adaptive control solution, next-gen discrete graphics and AWS virtual development environment. What’s New: At CES, Intel unveiled an expanded product portfolio and new partnerships designed to accelerate automakers’ transitions to electric and software-defined vehicles (SDVs). Intel now offers a whole-vehicle platform, including high-performance compute, discrete graphics, artificial intelligence (AI), power