Vision Algorithms for Embedded Vision

Most computer vision algorithms were developed on general-purpose computer systems with software written in a high-level language. Some of the pixel-processing operations (e.g., spatial filtering) have changed very little in the decades since they were first implemented on mainframes. With today's broader range of embedded vision implementations, existing high-level algorithms may not fit within the system's constraints, requiring new innovation to achieve the desired results.
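To make "spatial filtering" concrete, here is a minimal sketch in plain Python (no OpenCV) of a 3x3 neighborhood filter: each output pixel is a weighted sum of the input pixel and its eight neighbors. The function name `filter3x3` and the clamp-to-edge border policy are illustrative choices, not taken from any particular library.

```python
from typing import List

Image = List[List[int]]
Kernel = List[List[int]]


def filter3x3(img: Image, kernel: Kernel, divisor: int = 1) -> Image:
    """Apply a 3x3 spatial filter (correlation) with clamp-to-edge borders.

    For symmetric kernels (box blur, Gaussian) correlation and convolution
    give the same result. Integer floor division is used for normalization.
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0
            for ky in range(-1, 2):
                for kx in range(-1, 2):
                    # Clamp coordinates so border pixels reuse their nearest neighbor.
                    yy = min(max(y + ky, 0), h - 1)
                    xx = min(max(x + kx, 0), w - 1)
                    acc += img[yy][xx] * kernel[ky + 1][kx + 1]
            out[y][x] = acc // divisor
    return out


# Usage: a 3x3 box (mean) blur spreads a single bright pixel over its neighbors.
BOX = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
blurred = filter3x3([[0, 0, 0], [0, 9, 0], [0, 0, 0]], BOX, divisor=9)
```

The four nested loops make the cost explicit: nine multiply-accumulates per output pixel, which is exactly the kind of inner loop that embedded implementations try to shrink or parallelize.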

Some of this innovation may involve replacing a general-purpose algorithm with a hardware-optimized equivalent. With such a broad range of processors for embedded vision, algorithm analysis will likely focus on ways to maximize pixel-level processing within system constraints.
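As one illustration of a hardware-oriented rewrite, the sketch below computes a 3x3 mean (box) filter as two 1D passes and replaces the division by 9 with a fixed-point multiply and shift, a common trick on processors and FPGAs without a fast divider. The constants `RECIP_9` and `SHIFT` and both function names are hypothetical; a direct reference version is included so the two can be compared.

```python
from typing import List

Image = List[List[int]]

# Fixed-point reciprocal of 9: ceil(2**16 / 9). For any sum s with
# 0 <= s < 32768, (s * RECIP_9) >> SHIFT equals s // 9 exactly. A 3x3 window
# of 8-bit pixels sums to at most 9 * 255 = 2295, well inside that range.
RECIP_9 = 7282
SHIFT = 16


def clamp(v: int, lo: int, hi: int) -> int:
    return min(max(v, lo), hi)


def box3x3_separable(img: Image) -> Image:
    """3x3 mean filter: horizontal pass, vertical pass, then multiply-shift."""
    h, w = len(img), len(img[0])
    # Pass 1: horizontal 3-tap sums (2 additions per pixel).
    row_sums = [
        [img[y][clamp(x - 1, 0, w - 1)] + img[y][x] + img[y][clamp(x + 1, 0, w - 1)]
         for x in range(w)]
        for y in range(h)
    ]
    # Pass 2: vertical 3-tap sums of the row sums (2 more additions per pixel),
    # then divide by 9 using one multiply and one shift instead of a divider.
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        up, down = clamp(y - 1, 0, h - 1), clamp(y + 1, 0, h - 1)
        for x in range(w):
            s = row_sums[up][x] + row_sums[y][x] + row_sums[down][x]
            out[y][x] = (s * RECIP_9) >> SHIFT
    return out


def box3x3_direct(img: Image) -> Image:
    """Reference version: nine reads, eight additions and a true division per pixel."""
    h, w = len(img), len(img[0])
    return [
        [sum(img[clamp(y + dy, 0, h - 1)][clamp(x + dx, 0, w - 1)]
             for dy in (-1, 0, 1) for dx in (-1, 0, 1)) // 9
         for x in range(w)]
        for y in range(h)
    ]
```

Both functions use the same clamp-to-edge borders, so for 8-bit input they produce identical results; the separable version simply trades work that is expensive in hardware (a divide, redundant additions) for cheaper operations.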

This section refers to both general-purpose operations (e.g., edge detection) and hardware-optimized versions (e.g., parallel adaptive filtering in an FPGA). Many sources exist for general-purpose algorithms. The Embedded Vision Alliance is one of the best industry resources for learning about algorithms that map to specific hardware, since Alliance Members share this information directly with the vision community.
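For the general-purpose end of that spectrum, a minimal Sobel edge detector is sketched below. It assumes 8-bit grayscale input stored as nested lists, uses the |Gx| + |Gy| approximation of gradient magnitude (cheaper than a square root and popular in hardware pipelines), leaves border pixels at zero and saturates at 255. These choices, and the name `sobel_magnitude`, are illustrative assumptions rather than any library's definition.

```python
from typing import List

Image = List[List[int]]

# Standard 3x3 Sobel kernels for horizontal (x) and vertical (y) gradients.
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1],
           [0, 0, 0],
           [1, 2, 1]]


def sobel_magnitude(img: Image) -> Image:
    """Approximate gradient magnitude |Gx| + |Gy|, saturated to 8 bits.

    Border pixels (first/last row and column) are left at 0.
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for ky in range(3):
                for kx in range(3):
                    p = img[y + ky - 1][x + kx - 1]
                    gx += SOBEL_X[ky][kx] * p
                    gy += SOBEL_Y[ky][kx] * p
            out[y][x] = min(abs(gx) + abs(gy), 255)
    return out


# Usage: a vertical step from 0 to 100 produces a two-pixel-wide edge response.
step = [[0, 0, 0, 100, 100, 100] for _ in range(5)]
edges = sobel_magnitude(step)
```

Because every output pixel depends only on a fixed 3x3 neighborhood, the same computation maps naturally onto parallel hardware, where many windows can be evaluated at once.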

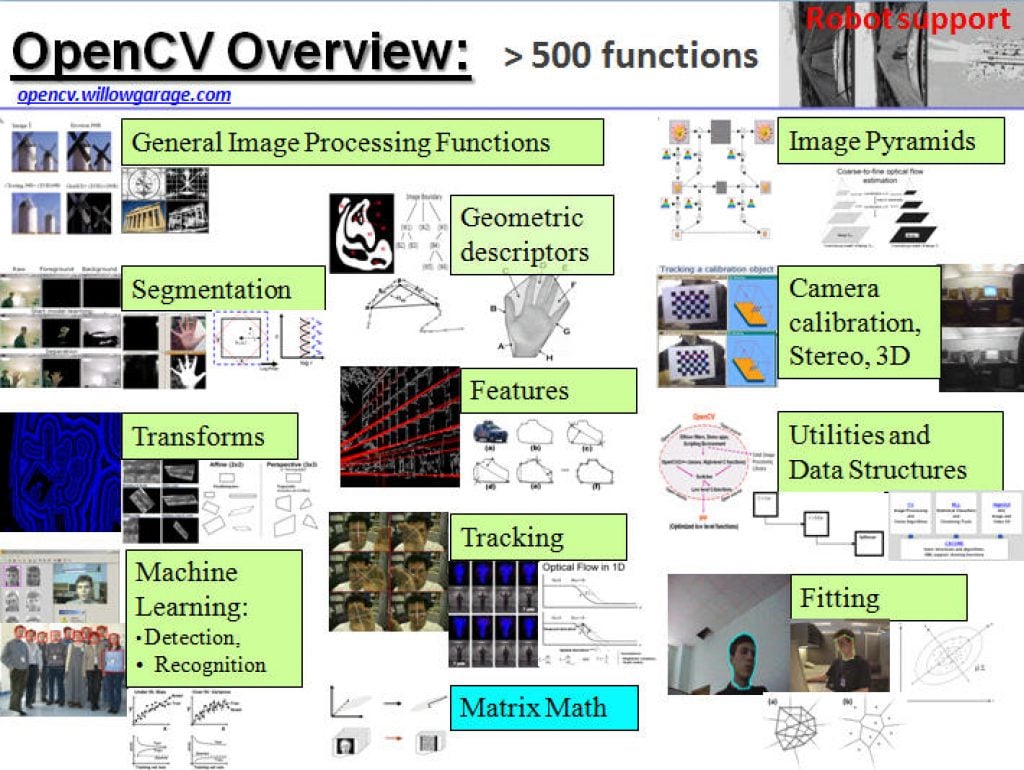

General-purpose computer vision algorithms

One of the most popular sources of computer vision algorithms is the OpenCV Library. OpenCV is open-source and written primarily in C++, with bindings for Python, Java and other languages. For more information, see the Alliance's interview with OpenCV Foundation President and CEO Gary Bradski, along with other OpenCV-related materials on the Alliance website.

Hardware-optimized computer vision algorithms

Several programmable device vendors have created optimized versions of off-the-shelf computer vision libraries. NVIDIA, for example, works closely with the OpenCV community and has created GPU-accelerated implementations of OpenCV algorithms. MathWorks provides MATLAB functions/objects and Simulink blocks for many computer vision algorithms within its Computer Vision System Toolbox, while also allowing vendors to create their own libraries of functions optimized for a specific programmable architecture. National Instruments offers its LabVIEW Vision module library. Xilinx is another example: it provides customers with an optimized computer vision library in the form of Plug and Play IP cores for creating hardware-accelerated vision algorithms in an FPGA.
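FPGA vision IP often processes pixels as a raster-order stream rather than as a stored frame. A common way to feed a 3x3 operator in that setting is to keep two line buffers (the previous two rows) plus a 3x3 register window, so a full neighborhood is available as each new pixel arrives. The Python model below is a sketch of that general pattern for illustration only; it is not any vendor's IP core, and `stream_windows` is a hypothetical name.

```python
from collections import deque
from typing import Iterable, Iterator, List, Tuple

Window = List[List[int]]


def stream_windows(pixels: Iterable[int], width: int) -> Iterator[Tuple[int, int, Window]]:
    """Software model of a raster-scan 3x3 window generator.

    Consumes pixels one at a time in row-major order and yields
    (row, col, window) for each interior pixel, where window is the 3x3
    neighborhood centered at (row, col). Only two rows of storage are kept.
    """
    line0 = [0] * width  # line buffer: pixels from row y - 2
    line1 = [0] * width  # line buffer: pixels from row y - 1
    # 3x3 window modeled as three shift registers (top, middle, bottom rows).
    win = [deque([0, 0, 0], maxlen=3) for _ in range(3)]
    for i, p in enumerate(pixels):
        y, x = divmod(i, width)
        # Shift the new column (rows y-2, y-1, y at column x) into the window.
        column = (line0[x], line1[x], p)
        for r in range(3):
            win[r].append(column[r])
        # Update the line buffers: each stored pixel moves up one row.
        line0[x] = line1[x]
        line1[x] = p
        # Once two full rows and two columns of the current row have arrived,
        # the window holds rows y-2..y and columns x-2..x, centered at (y-1, x-1).
        if y >= 2 and x >= 2:
            yield y - 1, x - 1, [list(row) for row in win]


# Usage: stream a 4x5 test image and collect every interior 3x3 window.
image = [[r * 10 + c for c in range(5)] for r in range(4)]
windows = list(stream_windows((v for row in image for v in row), width=5))
```

Each yielded window could be handed to a filter such as a box blur or Sobel operator; in hardware, the line buffers typically map to on-chip RAM and the window to registers, which is what allows one output per clock without buffering the whole frame.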

Other vision libraries

- Halcon

- Matrox Imaging Library (MIL)

- Cognex VisionPro

- VXL

- CImg

- Filters

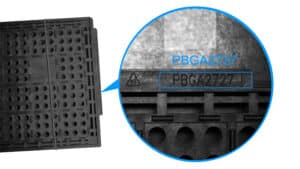

Efficient Optical Character Recognition (OCR)

This blog post was originally published at Basler’s website. It is reprinted here with the permission of Basler. Discover our easy and efficient way of implementing an OCR solution, with chip and IC trays as the particular use case example, where challenging characters are deciphered correctly every time. The solution overcomes low contrast and variable

ELD: Introducing a New Open-source Embedded Linker Tool for Embedded Systems

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. At Qualcomm Technologies, Inc., embedded linkers play a crucial role in our software stack. While many linkers work well on traditional platforms, they often fall short when it comes to embedded systems. Embedded projects have unique requirements,

North America and Europe to Account for 17 Million Video Telematics Systems in Use by 2029

For more information, visit https://www.berginsight.com/the-video-telematics-market. The integration of cameras to enable various video-based solutions in commercial vehicle environments is one of the most apparent trends in the fleet telematics sector today. Berg Insight’s definition of video telematics includes a broad range of camera-based solutions deployed in commercial vehicle fleets either as standalone applications or as

Using AI to Better Understand the Ocean

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Humans know more about deep space than we know about Earth’s deepest oceans. But scientists have plans to change that—with the help of AI. “We have better maps of Mars than we do of our own exclusive

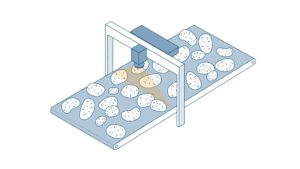

SWIR Vision Systems in Agricultural Production

This blog post was originally published at Basler’s website. It is reprinted here with the permission of Basler. Improved produce inspection through short-wave infrared light Ensuring the quality of fruits and vegetables such as apples or potatoes is crucial to meet market standards and consumer expectations. Traditional inspection methods are often based only on visual

Qualcomm Dragonwing Intelligent Video Suite Modernizes Video Management with Generative AI at Its Core

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Video cameras generate a lot of data. Companies that use a video management system (VMS) are left wanting to get more value out of all the video data they generate, enabling them to take the actions that

Rockets to Retail: Intel Core Ultra Delivers Edge AI for Video Management

At Intel Vision, Network Optix debuts natural language prompt prototype to redefine video management, offering industries faster AI-driven insights and efficiency. On the surface, aerospace manufacturers, shopping malls, universities, police departments and automakers might not have a lot in common. But they each collectively use and manage hundreds to thousands of video cameras across their

LLM Benchmarking: Fundamental Concepts

This article was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. The past few years have witnessed the rise in popularity of generative AI and large language models (LLMs), as part of a broad AI revolution. As LLM-based applications are rolled out across enterprises, there is a need to

Enterprise AI: Insights from Industry Leaders

This blog post was originally published at Tryolabs’ website. It is reprinted here with the permission of Tryolabs. The potential of AI continues to captivate businesses, yet the reality of implementation often proves challenging. In our latest event, we explored the complex landscape of enterprise AI Adoption. Our expert panel cut through the hype, offering

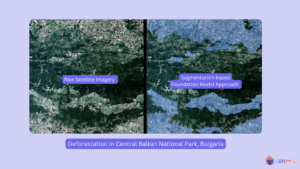

Visual Intelligence: Foundation Models + Satellite Analytics for Deforestation (Part 2)

This blog post was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. In Part 2, we explore how Foundation Models can be leveraged to track deforestation patterns. Building upon the insights from our Sentinel-2 pipeline and Central Balkan case study, we dive into the revolution that foundation models have

High-performance AI In House: Qualcomm Dragonwing AI On-prem Appliance Solution and Qualcomm AI Inference Suite

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. The Qualcomm Dragonwing AI On-Prem Appliance Solution pairs with the software and services in the Qualcomm AI Inference Suite for AI inference that spans from near-edge to cloud. Together, they allow your small-to-medium business, enterprise or industrial

Qualcomm Expands Generative AI Capabilities With Acquisition of VinAI Division

Highlights: Acquisition will strengthen Qualcomm’s generative AI research and development capabilities and expedite the creation of advanced AI solutions for products like smartphones, PCs, software-defined vehicles, and more Dr. Hung Bui, VinAI’s founder and CEO, will join Qualcomm Apr 1, 2025 – SAN DIEGO & HANOI, VIETNAM – Qualcomm today announced the acquisition of MovianAI Artificial

DeepSeek: Stop the Panic

DeepSeek is a precursor of many more disruptors coming in the AI world. Its importance may be overblown compared with what comes next. What’s at stake: DeepSeek nearly sank Nvidia and other AI model players. Except DeepSeek itself is barely known by anyone, its true story still a mystery, and the likely impact on the

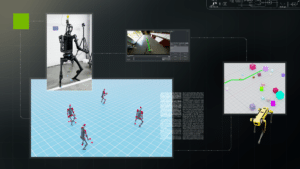

R²D²: Advancing Robot Mobility and Whole-body Control with Novel Workflows and AI Foundation Models from NVIDIA Research

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Welcome to the first edition of the NVIDIA Robotics Research and Development Digest (R2D2). This technical blog series will give developers and researchers deeper insight and access to the latest physical AI and robotics research breakthroughs across

Sony Semiconductor Demonstration of AITRIOS Vision AI at the Extreme Edge Made Simple

Armaghan Ebrahimi, Senior Technical Product Manager, and Zachary Li, Product and Business Development Manager, both of Sony Semiconductor, demonstrates the company’s latest edge AI and vision technologies and products at the March 2025 Edge AI and Vision Alliance Forum. Specifically, Ebrahimi and Li demonstration the Raspberry Pi AI Camera, powered by Sony’s IMX500 smart sensor

LLMOps Unpacked: The Operational Complexities of LLMs

This blog post was originally published at Tryolabs’ website. It is reprinted here with the permission of Tryolabs. Incorporating a Large Language Model (LLM) into a commercial product is a complex endeavor, far beyond the simplicity of prototyping. As Machine Learning and Generative AI (GenAI) evolve, so does the need for specialized operational practices, leading