Vision Algorithms for Embedded Vision

Most computer vision algorithms were developed on general-purpose computer systems with software written in a high-level language. Some of the pixel-processing operations (e.g., spatial filtering) have changed very little in the decades since they were first implemented on mainframes. With today’s broader range of embedded vision implementations, existing high-level algorithms may not fit within the system constraints, requiring innovation to achieve the desired results.
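As a point of reference, spatial filtering in its simplest form can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the function name `filter3x3`, the list-of-rows image representation, and the choice to copy border pixels through unchanged are all hypothetical and are not taken from any particular library.

```python
def filter3x3(image, kernel):
    """Apply a 3x3 kernel to a grayscale image stored as a list of rows.

    This is the correlation form (the kernel is not flipped), which gives the
    same result as convolution for symmetric kernels. Border pixels are copied
    through unchanged, an assumption made to keep the sketch short.
    """
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            acc = 0.0
            for ky in range(3):
                for kx in range(3):
                    acc += kernel[ky][kx] * image[y + ky - 1][x + kx - 1]
            out[y][x] = acc
    return out


# 3x3 box (mean) kernel: every pixel in the neighborhood contributes equally.
BOX_KERNEL = [[1 / 9] * 3 for _ in range(3)]

if __name__ == "__main__":
    # One bright pixel in a dark 5x5 image is spread over its neighborhood.
    img = [[0] * 5 for _ in range(5)]
    img[2][2] = 9
    for row in filter3x3(img, BOX_KERNEL):
        print([round(v, 2) for v in row])
```

The nested loops perform nine multiply-accumulate operations per pixel, which is the kind of per-pixel cost that becomes significant on a constrained embedded target.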

Some of this innovation may involve replacing a general-purpose algorithm with a hardware-optimized equivalent. With such a broad range of processors for embedded vision, algorithm analysis will likely focus on ways to maximize pixel-level processing within system constraints.
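One common way to make a filter friendlier to constrained hardware is to restrict it to integer additions and bit shifts and to split a 2-D kernel into two 1-D passes. The sketch below illustrates that idea for a 3x3 binomial smoothing kernel; it is an assumption-laden example rather than any vendor's implementation, and the function name `smooth_separable_int`, the integer list-of-rows image, and the copied borders are hypothetical choices.

```python
def smooth_separable_int(image):
    """3x3 binomial smoothing using two 1-D passes with integer adds and shifts.

    The 2-D kernel [[1, 2, 1], [2, 4, 2], [1, 2, 1]] / 16 separates into a
    horizontal [1, 2, 1] pass followed by a vertical [1, 2, 1] pass.
    Pixel values are assumed to be non-negative integers; border pixels are
    copied through unchanged.
    """
    height, width = len(image), len(image[0])

    # Horizontal pass: keep unnormalized sums so rounding happens only once.
    horiz = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(1, width - 1):
            horiz[y][x] = image[y][x - 1] + (image[y][x] << 1) + image[y][x + 1]

    # Vertical pass over the horizontal sums, then divide by 16 with rounding.
    out = [row[:] for row in image]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            total = horiz[y - 1][x] + (horiz[y][x] << 1) + horiz[y + 1][x]
            out[y][x] = (total + 8) >> 4
    return out


if __name__ == "__main__":
    img = [[0] * 5 for _ in range(5)]
    img[2][2] = 16
    for row in smooth_separable_int(img):
        print(row)
```

The separable form needs six additions and two shifts per pixel instead of nine multiply-accumulates, and it uses no floating-point arithmetic, which tends to map more naturally onto small processors and FPGA logic.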

This section refers to both general-purpose operations (e.g., edge detection) and hardware-optimized versions (e.g., parallel adaptive filtering in an FPGA). Many sources exist for general-purpose algorithms. The Embedded Vision Alliance is one of the best industry resources for learning about algorithms that map to specific hardware, since Alliance Members share this information directly with the vision community.
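To make the edge detection example concrete, here is a minimal sketch of a Sobel-style gradient operator in plain Python. It is one of several possible edge detectors, chosen here only for illustration; the function name `sobel_magnitude`, the list-of-rows image, the zeroed border, and the use of |gx| + |gy| in place of the Euclidean magnitude are all assumptions of this sketch.

```python
def sobel_magnitude(image):
    """Approximate gradient magnitude of a grayscale image using 3x3 Sobel kernels.

    The image is a list of rows of numbers. Border pixels are set to 0.
    The magnitude is approximated as |gx| + |gy|, which avoids a square root.
    """
    height, width = len(image), len(image[0])
    out = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Horizontal gradient: right column minus left column.
            gx = (image[y - 1][x + 1] + 2 * image[y][x + 1] + image[y + 1][x + 1]
                  - image[y - 1][x - 1] - 2 * image[y][x - 1] - image[y + 1][x - 1])
            # Vertical gradient: bottom row minus top row.
            gy = (image[y + 1][x - 1] + 2 * image[y + 1][x] + image[y + 1][x + 1]
                  - image[y - 1][x - 1] - 2 * image[y - 1][x] - image[y - 1][x + 1])
            out[y][x] = abs(gx) + abs(gy)
    return out


if __name__ == "__main__":
    # Vertical step edge: dark on the left, bright on the right.
    img = [[0, 0, 10, 10, 10] for _ in range(5)]
    for row in sobel_magnitude(img):
        print(row)
```

Each output pixel depends only on its own 3x3 neighborhood, so the computation for different pixels is independent. That independence is what makes operators like this natural candidates for parallel hardware.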

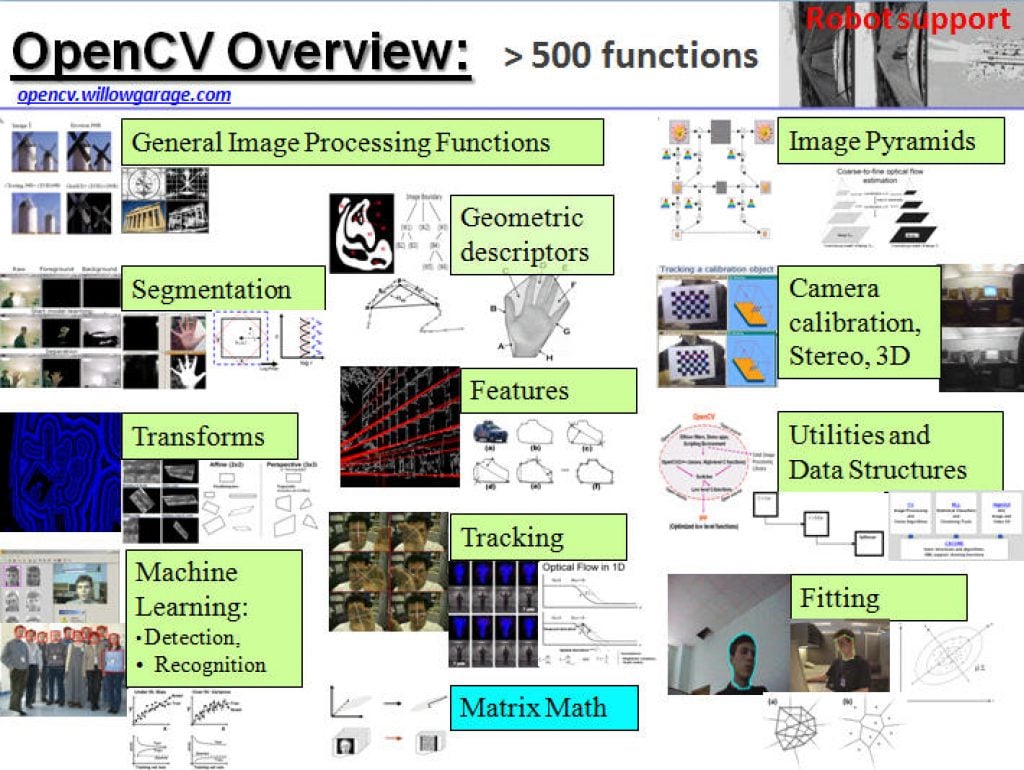

General-purpose computer vision algorithms

One of the most popular sources of computer vision algorithms is the OpenCV Library. OpenCV is open source; it began as a C library and is now written primarily in C++, with bindings for other languages such as Python and Java. For more information, see the Alliance’s interview with OpenCV Foundation President and CEO Gary Bradski, along with other OpenCV-related materials on the Alliance website.

Hardware-optimized computer vision algorithms

Several programmable device vendors have created optimized versions of off-the-shelf computer vision libraries. NVIDIA, for example, works closely with the OpenCV community and has created algorithms that are accelerated by GPGPUs. MathWorks provides MATLAB functions/objects and Simulink blocks for many computer vision algorithms within its Vision System Toolbox, while also allowing vendors to create their own libraries of functions that are optimized for a specific programmable architecture. National Instruments offers its LabVIEW Vision module library. Xilinx is another vendor with an optimized computer vision library, which it provides to customers as Plug and Play IP cores for creating hardware-accelerated vision algorithms in an FPGA.

Other vision libraries

- Halcon

- Matrox Imaging Library (MIL)

- Cognex VisionPro

- VXL

- CImg

- Filters

10 Common Mistakes in Data Science Projects and How to Avoid Them

This blog post was originally published at Digica’s website. It is reprinted here with the permission of Digica. While Data Science is an established and highly esteemed profession with strong foundations in science, it is important to remember that it is still a craft and, as such, it is susceptible to errors coming from processes

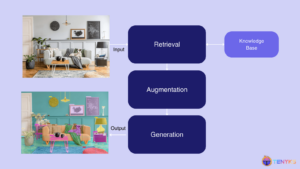

RAG for Vision: Building Multimodal Computer Vision Systems

This blog post was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. This article explores the exciting world of Visual RAG, exploring its significance and how it’s revolutionizing traditional computer vision pipelines. From understanding the basics of RAG to its specific applications in visual tasks and surveillance, we’ll examine

Qualcomm AI Research Makes Diverse Datasets Available to Advance Machine Learning Research

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Qualcomm AI Research has published a variety of datasets for research use. These datasets can be used to train models in the kinds of applications most common to mobile computing, including: advanced driver assistance systems (ADAS), extended reality

Make Your Existing NVIDIA Jetson Orin Devices Faster with Super Mode

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. The NVIDIA Jetson Orin Nano Super Developer Kit, with its compact size and high-performance computing capabilities, is redefining generative AI for small edge devices. NVIDIA has dropped an exciting new update to their existing Jetson

Empowering Civil Construction with AI-driven Spatial Perception

This blog post was originally published at Au-Zone Technologies’ website. It is reprinted here with the permission of Au-Zone Technologies. Transforming Safety, Efficiency, and Automation in Construction Ecosystems. In the rapidly evolving field of civil construction, AI-based spatial perception technologies are reshaping the way machinery operates in dynamic and unpredictable environments. These systems enable advanced

Can Money Buy AI Dominance?

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. The announcements of the creation of Stargate last week as well as the revelation of DeepSeek-V3’s performance at a much lower cost than OpenAI and its competitors are both raising a lot

The Future of AI in Business: Trends to Watch

This blog post was originally published at Digica’s website. It is reprinted here with the permission of Digica. In a world increasingly shaped by the rapid evolution of artificial intelligence, 2024 stands as another momentous year, with advancements that continue to reshape how we live, work, and imagine our future. From the rapid acceleration in

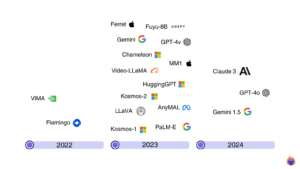

Multimodal Large Language Models: Transforming Computer Vision

This blog post was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. This article introduces multimodal large language models (MLLMs) [1], their applications using challenging prompts, and the top models reshaping computer vision as we speak. What is a multimodal large language model (MLLM)? In layman terms, a multimodal

How Qualcomm is Catalyzing Retail’s AI Revolution

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Retail is embracing innovation: we’ll be showing off examples of these game-changing experiences at NRF 2025. With more places to shop than ever, physical stores are turning to AI and technology for a competitive edge. That

How Au-Zone Technologies Plays a Key Role in the Ocean Cleanup Automated Debris Imaging System

This blog post was originally published at Au-Zone Technologies’ website. It is reprinted here with the permission of Au-Zone Technologies. Founded in 2012 in Rotterdam, The Netherlands, The Ocean Cleanup is developing and scaling technologies to rid the oceans of plastic with ocean cleanup systems and river interception technologies. With over 150 employees, The Ocean

DALL-E vs Gemini vs Stability: GenAI Evaluations

This article was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. We performed a side-by-side comparison of three models from leading providers in Generative AI for Vision. This is what we found: Despite the subjectivity involved in Human Evaluation, this is the best approach to evaluate state-of-the-art GenAI Vision

Harnessing the Power of LLM Models on Arm CPUs for Edge Devices

This blog post was originally published at Digica’s website. It is reprinted here with the permission of Digica. In recent years, the field of machine learning has witnessed significant advancements, particularly with the development of Large Language Models (LLMs) and image generation models. Traditionally, these models have relied on powerful cloud-based infrastructures to deliver impressive

AI On the Road: Why AI-powered Cars are the Future

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. AI transforms your driving experience in unexpected ways, as showcased by Qualcomm Technologies collaborations. As automotive technology rapidly advances, consumers are looking for vehicles that deliver AI-enhanced experiences through conversational voice assistants and sophisticated user interfaces. Automotive

Visual Intelligence at the Edge

This blog post was originally published at Au-Zone Technologies’ website. It is reprinted here with the permission of Au-Zone Technologies. Optimizing AI-based video telematics deployments on constrained SoCs platforms. The demand for advanced video telematics systems is growing rapidly as companies seek to enhance road safety, improve operational efficiency, and manage liability costs with AI-powered

NVIDIA JetPack 6.2 Brings Super Mode to NVIDIA Jetson Orin Nano and Jetson Orin NX Modules

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. The introduction of the NVIDIA Jetson Orin Nano Super Developer Kit sparked a new age of generative AI for small edge devices. The new Super Mode delivered an unprecedented generative AI performance boost of up to 1.7x

Improving Vision Model Performance Using Roboflow and Tenyks

This blog post was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. When improving an object detection model, many engineers focus solely on tweaking the model architecture and hyperparameters. However, the root cause of mediocre performance often lies in the data itself. In this collaborative post between Roboflow and