“Edge Inferencing—Scalability with Intel Vision Accelerator Design Cards,” a Presentation from Intel

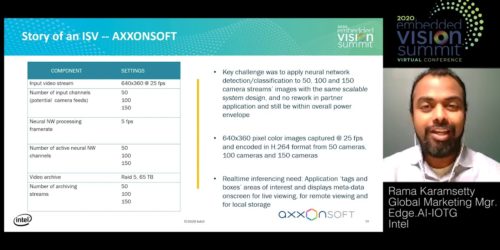

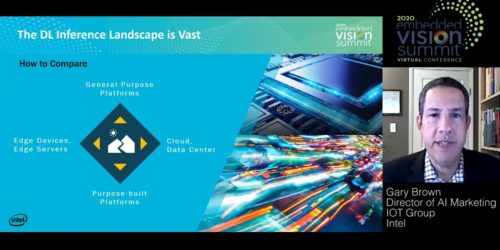

Rama Karamsetty, Global Marketing Manager at Intel, presents the “Edge Inferencing—Scalability with Intel Vision Accelerator Design Cards” tutorial at the September 2020 Embedded Vision Summit. Are you trying to deploy AI solutions at the edge but running into scalability challenges that make it difficult to meet your performance, power and price targets without creating […]