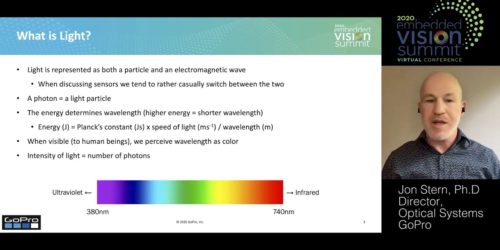

“CMOS Image Sensors: A Guide to Building the Eyes of a Vision System,” a Presentation from GoPro

Jon Stern, Director of Optical Systems at GoPro, presents the “CMOS Image Sensors: A Guide to Building the Eyes of a Vision System” tutorial at the September 2020 Embedded Vision Summit. Improvements in CMOS image sensors have been instrumental in lowering the barriers to embedding vision into a broad range of systems. For example, a high […]