Resources

In-depth information about edge AI and vision applications, technologies, products, markets and trends.

The content in this section of the website comes from Edge AI and Vision Alliance members and other industry luminaries.

All Resources

MemryX Raises $44 Million in Series B Funding for Advanced Edge AI Computing

ANN ARBOR, Mich., March 27, 2025 /PRNewswire/ – MemryX, a provider of industry-leading Edge AI semiconductor solutions, announced today it has raised $44 million in Series B funding. The funding round received broad support

OpenMV Demonstration of Its New N6 and AE3 Low Power Python Programmable AI Cameras and Other Products

Kwabena Agyeman, President and Co-founder of OpenMV, demonstrates the company’s latest edge AI and vision technologies and products at the March 2025 Edge AI and Vision Alliance Forum. Specifically, Agyeman demonstrates the company’s new N6

Andes Technology Demonstration of Its RISC-V IP in a Spherical Image Processor and Meta’s AI Accelerator

Marc Evans, Director of Business Development and Marketing at Andes Technology, demonstrates the company’s latest edge AI and vision technologies and products at the March 2025 Edge AI and Vision Alliance Forum. Specifically, Evans demonstrates

RGo Robotics Implements Vision-based Perception Engine on Qualcomm SoCs for Robotics Market

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Mobile robotics developers equip their machines to behave autonomously in the real world by generating facility maps,

Visidon Exhibiting at ISC West

Meet Our Team at the Premier U.S. Security Trade Event. We’re excited to announce that we’ll be showcasing our latest security and surveillance innovations at ISC West 2025, the leading security trade event in the

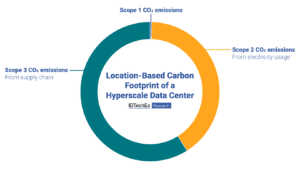

Key Insights from Data Centre World 2025: Sustainability and AI

Scope 2 power-based emissions and Scope 3 supply chain emissions make the biggest contribution to the data center’s carbon footprint. IDTechEx’s Sustainability for Data Centers report explores which technologies can reduce these emissions. Major data

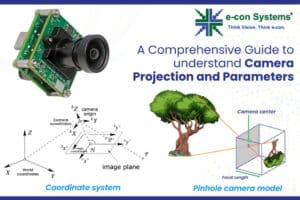

A Comprehensive Guide to Understand Camera Projection and Parameters

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Understanding camera projection and parameters is essential for mapping the 3D world into a 2D

Explaining Tokens — The Language and Currency of AI

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Under the hood of every AI application are algorithms that churn through data in their own language,

The Silent Threat to AI Initiatives

This blog post was originally published at Tryolabs’ website. It is reprinted here with the permission of Tryolabs. The single, most common reason why most AI projects fail is not technical. Having spent almost 15

Technologies

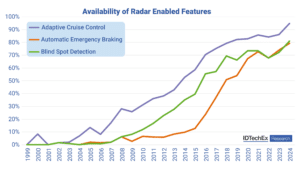

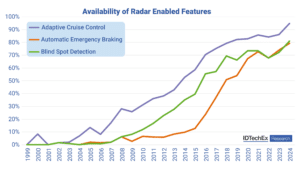

The Rising Role of Radar in the Future of ADAS and Autonomous Driving

Availability of radar-enabled features. IDTechEx’s report, “Automotive Radar Market 2025-2045: Robotaxis & Autonomous Cars”, predicts the automotive radar market will hit 500 million annual sales in 2041. This article takes a look at the role of radar in the future of advanced driver-assistance systems (ADAS) and autonomous driving, including market segmentation, regulatory catalysts, and regional

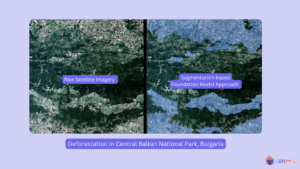

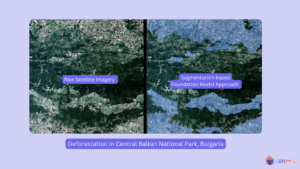

Visual Intelligence: Foundation Models + Satellite Analytics for Deforestation (Part 2)

This blog post was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. In Part 2, we explore how Foundation Models can be leveraged to track deforestation patterns. Building upon the insights from our Sentinel-2 pipeline and Central Balkan case study, we dive into the revolution that foundation models have

Emerging Memory and Storage Technology 2025-2035: Markets, Trends, Forecasts

For more information, visit https://www.idtechex.com/en/research-report/emerging-memory-and-storage-technology-2025-2035-markets-trends-forecasts/1088. The yearly memory & storage market size will exceed $300B by 2035. The explosion of AI and HPC workloads, the rising demand for data centers and cloud storage, and the expansion of IoT and edge computing are driving the need for next-generation memory and storage solutions, as well as

Applications

The Rising Role of Radar in the Future of ADAS and Autonomous Driving

Availability of radar-enabled features. IDTechEx’s report, “Automotive Radar Market 2025-2045: Robotaxis & Autonomous Cars”, predicts the automotive radar market will hit 500 million annual sales in 2041. This article takes a look at the role of radar in the future of advanced driver-assistance systems (ADAS) and autonomous driving, including market segmentation, regulatory catalysts, and regional

BrainChip Partners with RTX’s Raytheon for AFRL Radar Contract

Laguna Hills, Calif. – April 1st, 2025 – BrainChip Holdings Ltd (ASX: BRN, OTCQX: BRCHF, ADR: BCHPY), the world’s first commercial producer of ultra-low power, fully digital, event-based, neuromorphic AI, today announced that it is partnering with Raytheon Company, an RTX (NYSE: RTX) business, to service a contract for $1.8M from the Air Force Research Laboratory

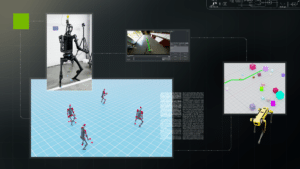

R²D²: Advancing Robot Mobility and Whole-body Control with Novel Workflows and AI Foundation Models from NVIDIA Research

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Welcome to the first edition of the NVIDIA Robotics Research and Development Digest (R2D2). This technical blog series will give developers and researchers deeper insight and access to the latest physical AI and robotics research breakthroughs across

Functions

Visual Intelligence: Foundation Models + Satellite Analytics for Deforestation (Part 2)

This blog post was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. In Part 2, we explore how Foundation Models can be leveraged to track deforestation patterns. Building upon the insights from our Sentinel-2 pipeline and Central Balkan case study, we dive into the revolution that foundation models have

A New Standard in Facial Recognition Security: Multispectral Imaging Technology

This blog post was originally published at Namuga Vision Connectivity’s website. It is reprinted here with the permission of Namuga Vision Connectivity. Traditional facial recognition technology has greatly enhanced both convenience and security. However, it still struggles to differentiate between real faces and sophisticated forgeries like silicone masks, printed photos and 3D models. Enter MultiSpectral

Visual Intelligence: Foundation Models + Satellite Analytics for Deforestation (Part 1)

This blog post was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. Satellite imagery has revolutionized how we monitor Earth’s forests, offering unprecedented insights into deforestation patterns. In this two-part series, we explore both traditional and cutting-edge approaches to forest monitoring, using Bulgaria’s Central Balkan National Park as our