Technologies

The listing below showcases the most recently published content associated with various AI and visual intelligence functions.

“Object Detection Models: Balancing Speed, Accuracy and Efficiency,” a Presentation from Union.ai

Sage Elliott, AI Engineer at Union.ai, presents the “Object Detection Models: Balancing Speed, Accuracy and Efficiency” tutorial at the May 2025 Embedded Vision Summit. Deep learning has transformed many aspects of computer vision, including object detection, enabling accurate and efficient identification of objects in images and videos. However, choosing the…

What Is The Role of Embedded Cameras in Smart Warehouse Automation?

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Cameras ensure that warehouse automation systems use visual data to function with consistency. They help these systems identify, track, and interact in real time. Discover how warehouse automation cameras work, their use cases, and critical imaging features.

AI-enhanced In-cabin Sensing Systems

As the trend of vehicle intelligence enhancement rises, in-cabin sensing systems will be largely responsible for increased communication, sensitivity, and smart features within cars. IDTechEx’s report, “In-Cabin Sensing 2025-2035: Technologies, Opportunities, and Markets”, provides the latest technology developments within the sector, along with forecasts for their uptake over the next ten years. Where AI meets

D3 Embedded, HTEC, Texas Instruments and Tobii Pioneer the Integration of Single-camera and Radar Interior Sensor Fusion for In-cabin Sensing

The companies joined forces to develop sensor-fusion-based interior sensing for enhanced vehicle safety, launching at the InCabin Europe conference on October 7-9. Rochester, NY – October 6, 2025 – Tobii, with its automotive interior sensing branch Tobii Autosense, together with D3 Embedded and HTEC, today announced the development of an interior sensing solution

“Lessons Learned Building and Deploying a Weed-killing Robot,” a Presentation from Tensorfield Agriculture

Xiong Chang, CEO and Co-founder of Tensorfield Agriculture, presents the “Lessons Learned Building and Deploying a Weed-Killing Robot” tutorial at the May 2025 Embedded Vision Summit. Agriculture today faces chronic labor shortages and growing challenges around herbicide resistance, as well as consumer backlash to chemical inputs. Smarter, more sustainable approaches…

How Do Speed Cameras Make the Roads Safer?

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Speed cameras play a crucial role in promoting safer roads. They can detect, capture, and record instances of speeding. Get insights into how these cameras work and their major road safety use cases. Managing road

“Understanding Human Activity from Visual Data,” a Presentation from Sportlogiq

Mehrsan Javan, Chief Technology Officer at Sportlogiq, presents the “Understanding Human Activity from Visual Data” tutorial at the May 2025 Embedded Vision Summit. Activity detection and recognition are crucial tasks in various industries, including surveillance and sports analytics. In this talk, Javan provides an in-depth exploration of human activity understanding,…

STMicroelectronics and Tobii Enter Mass Production of Breakthrough Interior Sensing Technology

Starting mass production of an advanced interior sensing system for a premium European carmaker for enhanced driver and passenger monitoring. Cost-effective single-camera solution combines Tobii’s interior-sensing technology and ST’s imaging sensors to deliver wide-angle, high-quality imaging in daytime and nighttime environments. Stockholm, Sweden; Geneva, Switzerland – October 2, 2025 – Tobii, the global leader in

PerCV.ai: How a Vision AI Platform and the STM32N6 can Turn Around an 80% Failure Rate for AI Projects

This blog post was originally published at STMicroelectronics’ website. It is reprinted here with the permission of STMicroelectronics. The vision AI platform PerCV.ai (pronounced Perceive AI) could be the secret weapon that enables a company to deploy an AI application when so many others fail. The solution from Irida Labs, a member of the ST

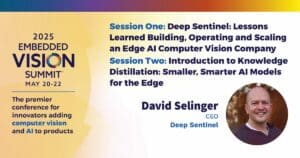

“Deep Sentinel: Lessons Learned Building, Operating and Scaling an Edge AI Computer Vision Company,” a Presentation from Deep Sentinel

David Selinger, CEO of Deep Sentinel, presents the “Deep Sentinel: Lessons Learned Building, Operating and Scaling an Edge AI Computer Vision Company” tutorial at the May 2025 Embedded Vision Summit. Deep Sentinel’s edge AI security cameras stop some 45,000 crimes per year. Unlike most security camera systems, they don’t just…