Resources

In-depth information about the edge AI and vision applications, technologies, products, markets and trends.

The content in this section of the website comes from Edge AI and Vision Alliance members and other industry luminaries.

All Resources

Upcoming In-person Event from Andes Technology Explores the RISC-V Ecosystem

On April 29, 2025, from 9:00 AM to 6:00 PM PT, Alliance Member company Andes Technology will host the RISC-V CON Silicon Valley event at the DoubleTree Hotel by Hilton in San Jose, CA. Jeff

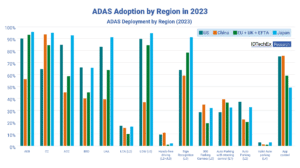

L2+ ADAS Outpaces L3 in Europe, US$4B by 2042

14 ADAS Features Deployed in EU. Privately owned Level 3 autonomous vehicles encountered significant regulatory setbacks in 2017 when Audi attempted to pioneer the market with the L3-ready A8. Regulatory uncertainty quickly stalled these ambitions,

Exploring the COCO Dataset

This article was originally published at 3LC’s website. It is reprinted here with the permission of 3LC. The COCO dataset is a cornerstone of modern object detection, shaping models used in self-driving cars, robotics, and

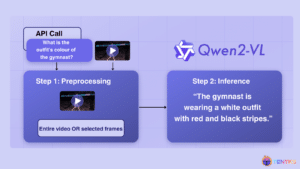

Video Understanding: Qwen2-VL, An Expert Vision-language Model

This article was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. Qwen2-VL, an advanced vision language model built on Qwen2 [1], sets new benchmarks in image comprehension across varied

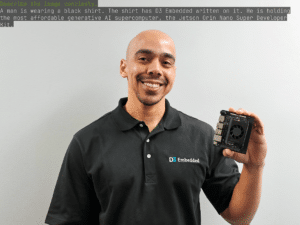

Removing the Barriers to Edge and Generative AI in Embedded Vision

This blog post was originally published at Macnica’s website. It is reprinted here with the permission of Macnica. The introduction of artificial intelligence (AI) has ushered in exciting new applications for surveillance cameras and other

Powering IoT Developers with Edge AI: the Qualcomm RB3 Gen 2 Kit is Now Supported in the Edge Impulse Platform

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. The Qualcomm RB3 Gen 2 Development Kit has been designed to help you develop high-performance IoT and

Intel Accelerates AI at the Edge Through an Open Ecosystem

Intel empowers partners to seamlessly integrate AI into existing infrastructure with its new Intel AI Edge Systems, Edge AI Suites and Open Edge Platform software. What’s New: Intel is unveiling its new Intel® AI Edge Systems,

D3 Embedded to Showcase Innovative Industrial and Physical AI Solutions at NVIDIA GTC

D3 Embedded to demonstrate real-time edge AI solutions built on powerful NVIDIA platforms and frameworks including NVIDIA Isaac™ Perceptor, Holoscan Sensor Bridge, Jetson Orin™, and NVIDIA IGX Orin™, at GTC. Rochester, NY – March 18,

Radar-enhanced Safety for Advancing Autonomy

Front and side radars may have different primary uses and drivers for their innovation, but together, they form a vital part of ADAS for autonomous vehicles. IDTechEx’s report, “Automotive Radar Market 2025-2045: Robotaxis & Autonomous

Technologies

The Rising Role of Radar in the Future of ADAS and Autonomous Driving

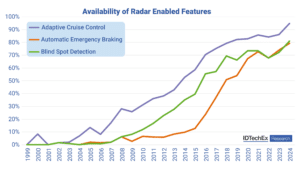

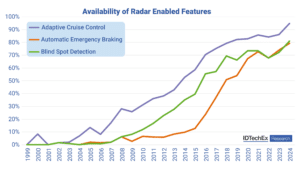

Availability of radar-enabled features. IDTechEx’s report, “Automotive Radar Market 2025-2045: Robotaxis & Autonomous Cars”, predicts the automotive radar market will hit 500 million annual sales in 2041. This article takes a look at the role of radar in the future of advanced driver-assistance systems (ADAS) and autonomous driving, including market segmentation, regulatory catalysts, and regional

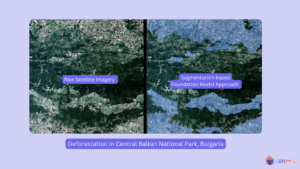

Visual Intelligence: Foundation Models + Satellite Analytics for Deforestation (Part 2)

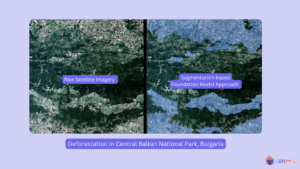

This blog post was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. In Part 2, we explore how Foundation Models can be leveraged to track deforestation patterns. Building upon the insights from our Sentinel-2 pipeline and Central Balkan case study, we dive into the revolution that foundation models have

Emerging Memory and Storage Technology 2025-2035: Markets, Trends, Forecasts

For more information, visit https://www.idtechex.com/en/research-report/emerging-memory-and-storage-technology-2025-2035-markets-trends-forecasts/1088. The yearly memory and storage market will exceed $300B by 2035. The explosion of AI and HPC workloads, the rising demand for data centers and cloud storage, and the expansion of IoT and edge computing are driving the need for next-generation memory and storage solutions, as well as

Applications

BrainChip Partners with RTX’s Raytheon for AFRL Radar Contract

Laguna Hills, Calif. – April 1st, 2025 – BrainChip Holdings Ltd (ASX: BRN, OTCQX: BRCHF, ADR: BCHPY), the world’s first commercial producer of ultra-low power, fully digital, event-based, neuromorphic AI, today announced that it is partnering with Raytheon Company, an RTX (NYSE: RTX) business, to service a contract for $1.8M from the Air Force Research Laboratory

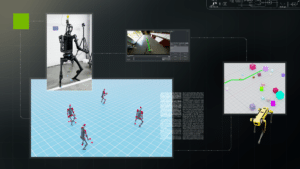

R²D²: Advancing Robot Mobility and Whole-body Control with Novel Workflows and AI Foundation Models from NVIDIA Research

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Welcome to the first edition of the NVIDIA Robotics Research and Development Digest (R2D2). This technical blog series will give developers and researchers deeper insight and access to the latest physical AI and robotics research breakthroughs across

Functions

A New Standard in Facial Recognition Security: Multispectral Imaging Technology

This blog post was originally published at Namuga Vision Connectivity’s website. It is reprinted here with the permission of Namuga Vision Connectivity. Traditional facial recognition technology has greatly enhanced both convenience and security. However, it still struggles to differentiate between real faces and sophisticated forgeries like silicone masks, printed photos and 3D models. Enter MultiSpectral

Visual Intelligence: Foundation Models + Satellite Analytics for Deforestation (Part 1)

This blog post was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. Satellite imagery has revolutionized how we monitor Earth’s forests, offering unprecedented insights into deforestation patterns. In this two-part series, we explore both traditional and cutting-edge approaches to forest monitoring, using Bulgaria’s Central Balkan National Park as our